Object Detection and Classification in Computer Vision

Introduction

Object detection and classification are fundamental tasks in computer vision that enable machines to recognize and categorize objects in images or videos. These technologies power applications such as autonomous vehicles, facial recognition, and medical diagnostics. This blog explains the key differences between object detection and classification, describes popular detection algorithms and how CNNs perform classification, and links to implementation guides.

Difference Between Object Detection and Classification

Although they are often used together, object detection and classification serve different purposes. Both help machines understand visual data, but they operate at different levels of analysis.

1. Object Classification

Object classification refers to the process of assigning an image or a specific region within an image to a predefined category. The goal is to determine what object is present in an image without identifying its exact location.

Key Characteristics:

- It assigns a single label to the entire image.

- It works well when only one object is present in an image.

- It does not provide location information or distinguish between multiple objects.

How It Works:

- Feature Extraction: Identifies important patterns such as edges, textures, and colors.

- Model Training: A neural network is trained to recognize these features and associate them with predefined categories.

- Prediction: The model assigns the most probable category to the given image.
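The prediction step can be sketched in a few lines of Python. This sketch assumes a trained model has already produced one score per class; the `predict_label` helper, the labels, and the scores are hypothetical values chosen for illustration.

```python
def predict_label(scores, labels):
    """Return the label with the highest score (argmax over classes)."""
    if len(scores) != len(labels):
        raise ValueError("need exactly one score per label")
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return labels[best_index]

# Hypothetical per-class scores for a single image.
labels = ["cat", "dog", "car"]
scores = [1.2, 3.4, 0.3]
print(predict_label(scores, labels))  # dog
```

Whatever the image contains, the result is a single label, which is why classification alone cannot report several objects or where they are.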

2. Object Detection

Object detection extends classification by identifying multiple objects in an image and determining their locations. It involves both classification (recognizing what the object is) and localization (drawing bounding boxes around objects).

Key Characteristics:

- It detects multiple objects within the same image.

- It locates objects by drawing bounding boxes.

- It is used in applications requiring spatial awareness, such as autonomous driving and security surveillance.

How It Works:

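- Region Proposal: In two-stage detectors such as Faster R-CNN, the algorithm first scans the image for regions likely to contain objects. Single-stage detectors such as YOLO and SSD skip this step and predict boxes directly.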

- Feature Extraction: Extracts important features from each region.

- Classification & Localization: Assigns a category to each detected object and defines its position with bounding boxes.
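The classification-and-localization step can be sketched as turning each candidate region's class scores into a labeled box. This is a simplified illustration: it assumes the candidate regions and their per-class confidence scores are already given, and the `Detection` class, the `label_regions` helper, and the 0.5 threshold are hypothetical choices, not taken from any specific detector. Real detectors also refine the box coordinates and suppress overlapping duplicates.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str
    confidence: float
    box: tuple  # (x_min, y_min, x_max, y_max) in pixels

def label_regions(regions, region_scores, labels, threshold=0.5):
    """Give each region its best-scoring class; keep only confident regions."""
    detections = []
    for box, scores in zip(regions, region_scores):
        best = max(range(len(labels)), key=lambda i: scores[i])
        if scores[best] >= threshold:
            detections.append(Detection(labels[best], scores[best], box))
    return detections

# Hypothetical candidate regions and their per-class scores (dog, cat).
labels = ["dog", "cat"]
regions = [(10, 20, 110, 140), (150, 30, 260, 160), (300, 300, 320, 320)]
region_scores = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.25]]

for d in label_regions(regions, region_scores, labels):
    print(d.label, d.confidence, d.box)
# dog 0.9 (10, 20, 110, 140)
# dog 0.8 (150, 30, 260, 160)
```

Unlike the single label from classification, the output is a list: here two dogs with their locations, while the low-confidence third region is discarded.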

Comparison Table

| Feature | Object Classification | Object Detection |
|---|---|---|
| Purpose | Assigns a label to an image | Identifies objects and their locations |
| Output | Single class label | Class labels with bounding boxes |
| Number of Objects | Typically one | Multiple |
| Spatial Information | No | Yes |
| Example | Classifying an image as "dog" | Detecting multiple dogs and their locations |

Popular Object Detection Algorithms

Many algorithms have been developed for object detection, each striking a different balance between speed and accuracy. Below are three of the most widely used:

1. YOLO (You Only Look Once)

YOLO is one of the fastest object detection algorithms, making it ideal for real-time applications. Unlike traditional methods that analyze images in multiple stages, YOLO processes the entire image in a single pass, significantly improving speed.

Key Features:

- Real-time performance: YOLO can detect objects at high speeds with minimal delay.

- Single neural network architecture: The entire image is processed at once, making it more efficient.

- Grid-based detection: The image is divided into a grid, and each grid cell predicts bounding boxes and class probabilities for objects whose center falls inside it, all in the same pass.

- Common applications: Autonomous vehicles, security surveillance, and robotics.
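To make the grid idea concrete, the sketch below follows the parameterization described in the original YOLO paper (YOLOv1); later YOLO versions changed it. The image is split into an S×S grid, the cell containing an object's center is responsible for detecting it, and each cell predicts B boxes (x, y, w, h, confidence) plus C class probabilities. The values S = 7, B = 2, C = 20 and the 448×448 input match that paper's PASCAL VOC setup; the helper names and the object position are hypothetical.

```python
def responsible_cell(cx, cy, img_w, img_h, S=7):
    """Return (row, col) of the grid cell containing the box center (cx, cy)."""
    col = min(int(cx * S / img_w), S - 1)  # clamp centers lying on the right edge
    row = min(int(cy * S / img_h), S - 1)  # clamp centers lying on the bottom edge
    return row, col

def output_tensor_shape(S=7, B=2, C=20):
    """Each cell predicts B boxes (x, y, w, h, confidence) and C class scores."""
    return (S, S, B * 5 + C)

def decode_center(row, col, x_off, y_off, img_w, img_h, S=7):
    """Convert a cell-relative center offset (0..1) back to pixel coordinates."""
    cx = (col + x_off) * img_w / S
    cy = (row + y_off) * img_h / S
    return cx, cy

print(output_tensor_shape())                 # (7, 7, 30)
# Hypothetical object centered at (300, 100) in a 448x448 image.
print(responsible_cell(300, 100, 448, 448))  # (1, 4)
print(decode_center(1, 4, 0.6875, 0.5625, 448, 448))
```

Because every cell makes its predictions from the same forward pass, the whole image is handled at once, which is where YOLO's speed comes from.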

2. SSD (Single Shot MultiBox Detector)

SSD is another fast single-stage detector. Unlike R-CNN-based approaches, it does not need a separate region proposal stage: a single deep neural network predicts multiple bounding boxes and classifies the objects in them in one step.

Key Features:

- Higher speed compared to Faster R-CNN: Can process images quickly while maintaining reasonable accuracy.

- Multi-scale feature maps: Detects objects of different sizes at various layers of the network.

- Balance between speed and accuracy: Suitable for applications requiring both performance and precision, such as mobile applications, edge computing, and drones.
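Multi-scale detection in SSD relies on "default boxes" of different sizes attached to each feature map. The sketch below uses the linear scale rule from the SSD paper, s_k = s_min + (s_max - s_min)(k - 1)/(m - 1) with s_min = 0.2 and s_max = 0.9 over m feature maps, and sizes each box as w = s_k·√a, h = s_k/√a for aspect ratio a. The aspect-ratio subset and the helper names are assumptions, and released implementations adjust some of these values.

```python
import math

def default_box_scales(m=6, s_min=0.2, s_max=0.9):
    """One box scale per feature map (k = 1..m), spaced linearly."""
    return [s_min + (s_max - s_min) * (k - 1) / (m - 1) for k in range(1, m + 1)]

def default_box_sizes(scale, aspect_ratios=(1.0, 2.0, 0.5)):
    """(width, height) relative to the image size for each aspect ratio w/h."""
    return [(scale * math.sqrt(a), scale / math.sqrt(a)) for a in aspect_ratios]

scales = default_box_scales()
print([round(s, 2) for s in scales])  # [0.2, 0.34, 0.48, 0.62, 0.76, 0.9]
for w, h in default_box_sizes(scales[0]):
    print(f"w={w:.3f} h={h:.3f}")
```

Early, high-resolution feature maps get the smallest boxes, suited to small objects, while deeper, coarser maps get the largest boxes, suited to large objects.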

3. Faster R-CNN (Region-based Convolutional Neural Network)

Faster R-CNN is one of the most accurate object detection models, but it is computationally expensive. It follows a two-stage process where it first identifies regions of interest (RoIs) and then classifies objects within those regions.

Key Features:

- Two-stage detection process: First proposing likely object locations and then classifying and refining them typically yields higher accuracy than single-stage detectors.

- Region Proposal Network (RPN): Replaces traditional selective search, improving efficiency.

- Best for high-precision tasks: Used in medical imaging, satellite image analysis, and automated inspection systems.
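The Region Proposal Network places a fixed set of reference boxes, called anchors, at every feature-map position and learns to score and adjust each one. The sketch below generates anchors using the configuration described in the Faster R-CNN paper: 3 scales (box areas of 128², 256², and 512² pixels) times 3 aspect ratios gives 9 anchors per position, on a feature map with stride 16 (a VGG-16 backbone). The centering convention, the 40×60 feature-map size, and the helper names are assumptions.

```python
import math

def anchors_at(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Anchors (x_min, y_min, x_max, y_max) centered on (cx, cy).

    Each anchor has area scale**2 and height/width equal to the ratio r.
    """
    boxes = []
    for s in scales:
        for r in ratios:
            w = s / math.sqrt(r)
            h = s * math.sqrt(r)
            boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return boxes

def anchor_grid(feat_h, feat_w, stride=16):
    """Place anchors at every feature-map cell, mapped back to image pixels."""
    all_boxes = []
    for i in range(feat_h):
        for j in range(feat_w):
            cx = (j + 0.5) * stride  # assumed: anchor centered in its cell
            cy = (i + 0.5) * stride
            all_boxes.extend(anchors_at(cx, cy))
    return all_boxes

print(len(anchors_at(0, 0)))     # 9
print(len(anchor_grid(40, 60)))  # 21600
```

The RPN keeps only the highest-scoring of these thousands of anchors as proposals, which is how it replaces selective search.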

Comparison of Object Detection Algorithms

| Algorithm | Speed | Accuracy | Suitable for |
|---|---|---|---|
| YOLO | High | Moderate | Real-time applications (self-driving cars, surveillance) |
| SSD | High | Moderate | Mobile and embedded applications |
| Faster R-CNN | Low | High | High-precision tasks (medical imaging, research) |

How CNNs Help in Object Classification

Convolutional Neural Networks (CNNs) have revolutionized the field of computer vision by enabling machines to classify objects with high accuracy. CNNs are specifically designed to process image data by extracting hierarchical features and making predictions based on learned patterns.

1. Feature Extraction with Convolution Layers

The first step in object classification is extracting meaningful features from the image. This is done using convolution layers, which apply filters to detect various patterns such as edges, textures, and shapes.

- Each filter (or kernel) scans the image, capturing important spatial information.

- In early layers, CNNs detect simple features like edges and corners.

- As depth increases, more complex features like shapes and textures are recognized.

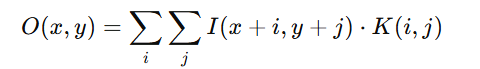

Mathematical Representation:

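If an input image is represented as I(x, y) and the kernel as K(m, n), the convolution operation is performed as:

$$O(x, y) = \sum_{m} \sum_{n} I(x + m,\, y + n)\, K(m, n)$$

where O(x, y) is the output feature map and the sums run over every position (m, n) in the kernel.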
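To make the operation concrete, here is a minimal pure-Python sketch of a stride-1 "valid" convolution: each output value is the sum of element-wise products between the kernel and the image patch beneath it. The `conv2d` helper, the image, and the vertical-edge kernel are made-up examples; real frameworks add padding, strides, multiple channels, and a bias term.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution with stride 1, as used in CNN layers.

    Computes O[x][y] = sum over (m, n) of I[x + m][y + n] * K[m][n],
    with x indexing rows. Like most deep learning libraries, it does not
    flip the kernel (strictly speaking, this is cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = [[0.0] * out_w for _ in range(out_h)]
    for x in range(out_h):
        for y in range(out_w):
            total = 0.0
            for m in range(kh):
                for n in range(kw):
                    total += image[x + m][y + n] * kernel[m][n]
            output[x][y] = total
    return output

# A 4x5 image: dark (0) columns on the left, bright (1) columns on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hypothetical vertical-edge kernel: responds where intensity rises left to right.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
for row in conv2d(image, kernel):
    print(row)
# [3.0, 3.0, 0.0]
# [3.0, 3.0, 0.0]
```

The large values mark the positions where the kernel straddles the dark-to-bright boundary, and the zero marks a uniform region: this is the kind of edge response early CNN layers learn to produce.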

2. Dimensionality Reduction with Pooling Layers

Pooling layers reduce the size of the feature maps while retaining important information. This helps in:

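- Reducing computation: Smaller feature maps mean fewer operations in later layers and fewer weights in any fully connected layer that follows.

- Enhancing robustness: Makes the model less sensitive to small variations in the image.

- Reducing overfitting: With fewer values to fit, the model tends to generalize better to unseen images.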

Types of Pooling:

- Max Pooling: Retains the most prominent feature in a region.

- Average Pooling: Takes the average of pixel values in a region.

With a 2×2 window and a stride of 2, max pooling keeps the highest value from each non-overlapping 2×2 region, halving the height and width of the feature map while preserving its strongest responses.
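Both pooling types can be sketched with one pure-Python function. The `pool2d` helper and the 4×4 feature map are hypothetical examples, and padding is not handled.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Downsample a 2D feature map with max or average pooling (no padding)."""
    if mode not in ("max", "average"):
        raise ValueError("mode must be 'max' or 'average'")
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Collect the size x size window starting at (i*stride, j*stride).
            window = [
                feature_map[i * stride + a][j * stride + b]
                for a in range(size)
                for b in range(size)
            ]
            row.append(max(window) if mode == "max" else sum(window) / len(window))
        output.append(row)
    return output

fmap = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 5, 7],
    [1, 1, 3, 2],
]
print(pool2d(fmap, mode="max"))      # [[6, 2], [2, 7]]
print(pool2d(fmap, mode="average"))  # [[3.5, 1.0], [1.0, 4.25]]
```

The 4×4 map shrinks to 2×2 in both cases; max pooling keeps each region's strongest activation, while average pooling smooths them.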

3. Fully Connected Layers for Classification

After feature extraction and dimensionality reduction, the extracted features are fed into fully connected layers (also called dense layers). These layers perform:

- Flattening: Converts the stack of 2D feature maps into a single 1D vector.

- Classification: Uses weights and biases to determine the probability of each object class.
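Flattening and a dense layer reduce to concatenation and weighted sums. The sketch assumes two hypothetical 2×2 feature maps and hand-picked weights and biases for two classes; in a real network, the weights and biases are learned during training, and the `flatten` and `dense` helpers are illustrative names.

```python
def flatten(feature_maps):
    """Concatenate a list of 2D feature maps (one per channel) into a 1D list."""
    return [value for fmap in feature_maps for row in fmap for value in row]

def dense(vector, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output class."""
    return [
        sum(w * v for w, v in zip(w_row, vector)) + b
        for w_row, b in zip(weights, biases)
    ]

# Two hypothetical 2x2 feature maps, e.g. produced by a pooling layer.
maps = [[[6, 2], [2, 7]], [[0, 1], [1, 0]]]
x = flatten(maps)
print(x)  # [6, 2, 2, 7, 0, 1, 1, 0]

# Hypothetical weights: one row of 8 weights per class, plus one bias per class.
weights = [
    [0.5, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0],
]
biases = [0.0, -1.0]
print(dense(x, weights, biases))  # [6.5, 1.0]
```

These outputs are raw class scores, not yet probabilities.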

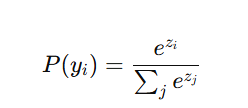

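The final layer uses the Softmax activation function, which converts the outputs into probability values for each class:

$$P(y = i) = \frac{e^{z_i}}{\sum_{j=1}^{C} e^{z_j}}$$

where z_i is the raw score (logit) the network produces for class i and C is the number of classes. The resulting probabilities are all positive and sum to 1.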
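A numerically stable Softmax takes only a few lines of Python. Subtracting the largest score before exponentiating is a standard trick that leaves the result unchanged while avoiding overflow for large scores. The `softmax` helper and the example scores are illustrative.

```python
import math

def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1.

    Subtracting the max logit avoids overflow in exp() and does not change
    the result, because the shift cancels between numerator and denominator.
    """
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
print(softmax([1000.0, 1000.0]))     # [0.5, 0.5], no overflow
```

The class with the highest raw score always receives the highest probability, so the predicted class is unchanged; Softmax only makes the scores interpretable as probabilities.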

Workflow of CNN in Object Classification

- Input Image → Preprocessed for consistency.

- Convolution Layers → Extract edges, textures, and patterns.

- Pooling Layers → Reduce feature map size and retain key features.

- Fully Connected Layers → Classify the extracted features.

- Softmax Layer → Assigns probabilities to each class.
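Each step of this workflow changes the shape of the data. The sketch below traces those shapes for a hypothetical network: a 28×28 grayscale input, one 3×3 convolution layer with 8 filters (no padding, stride 1), 2×2 max pooling with stride 2, and a dense layer for 10 classes. The layer sizes and helper names are assumptions chosen for illustration.

```python
def window_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling window slides over `size`."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_shapes(h, w, num_filters, num_classes):
    """Shapes through conv(3x3) -> max pool(2x2, stride 2) -> flatten -> dense."""
    shapes = [("input", (h, w, 1))]
    h, w = window_output_size(h, 3), window_output_size(w, 3)
    shapes.append(("conv 3x3", (h, w, num_filters)))
    h, w = window_output_size(h, 2, stride=2), window_output_size(w, 2, stride=2)
    shapes.append(("max pool 2x2", (h, w, num_filters)))
    shapes.append(("flatten", (h * w * num_filters,)))
    shapes.append(("dense + softmax", (num_classes,)))
    return shapes

for name, shape in trace_shapes(28, 28, num_filters=8, num_classes=10):
    print(f"{name:16} {shape}")
```

The trace runs 28×28×1 → 26×26×8 → 13×13×8 → 1,352 values → 10 class probabilities. Without pooling, flattening would yield 26 × 26 × 8 = 5,408 values instead of 1,352, so the dense layer would need four times as many weights.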

Key Takeaways

Object detection and classification are critical for many AI-driven applications. With deep learning algorithms like YOLO, SSD, and Faster R-CNN, machines can both recognize and locate objects in images, trading speed against accuracy to suit the application. Implementing these techniques using frameworks like OpenCV and TensorFlow enables developers to build intelligent vision-based applications efficiently.

Implementation Blogs:

Implementation of Object Detection using YOLO in OpenCV

Implementation of Object Detection using SSD in OpenCV

Implementation of Faster R-CNN Using OpenCV

Next Blog: Face Recognition and Tracking
