Generative AI: An In-Depth Exploration of GANs and Diffusion Models

Generative AI has emerged as one of the most exciting frontiers in machine learning. By enabling machines to generate new, synthetic data—whether images, videos, text, or music—Generative AI is transforming how we interact with technology, unleashing creativity, and providing solutions to complex real-world problems. Central to generative AI are Generative Adversarial Networks (GANs) and Diffusion Models, two cutting-edge techniques that have revolutionized content generation.

In this section, we will explore Generative Adversarial Networks (GANs) and Diffusion Models in detail, discussing their inner workings, applications, strengths, limitations, and the challenges they face in real-world implementations.

Generative Adversarial Networks (GANs)

Introduction to GANs

Generative Adversarial Networks (GANs) were introduced by Ian Goodfellow and his team in 2014 as a groundbreaking approach to generative modeling. The main goal of GANs is to train a model capable of generating data that resembles real-world data. GANs are particularly useful for generating realistic images, videos, and even audio, based on the patterns and features learned from existing datasets.

A GAN consists of two key components:

- Generator (G): The Generator network creates synthetic data (e.g., images, audio) from random noise or latent space. It learns to transform random vectors into data that looks like real data.

- Discriminator (D): The Discriminator network evaluates the authenticity of the generated data by distinguishing between real data and the fake data generated by the Generator. Its job is to classify data as either "real" (from the training set) or "fake" (generated by the Generator).

These two components are engaged in a game-theoretic scenario: The Generator tries to deceive the Discriminator into thinking the generated data is real, while the Discriminator strives to distinguish between real and fake data. Over time, both networks improve their performance, and eventually, the Generator produces high-quality, realistic data.
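As a minimal sketch of these two roles, assuming one-dimensional data and hand-picked parameters, the Generator can be pictured as a function from noise to samples and the Discriminator as a function from samples to a probability. The names `generator`, `discriminator`, `scale`, `shift`, `weight`, and `bias` are illustrative assumptions, not part of any library; real GANs use neural networks in both places.

```python
import math
import random


def generator(z, scale=1.0, shift=0.0):
    """Toy Generator G: maps a latent noise value z to a synthetic sample."""
    return scale * z + shift


def discriminator(x, weight=1.0, bias=0.0):
    """Toy Discriminator D: estimated probability that x is real (logistic unit)."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))


rng = random.Random(0)
latent = [rng.gauss(0.0, 1.0) for _ in range(5)]            # random noise vectors z
fake_samples = [generator(z, scale=0.5, shift=2.0) for z in latent]
scores = [discriminator(x, weight=1.0, bias=-1.0) for x in fake_samples]

for x, score in zip(fake_samples, scores):
    print(f"G(z) = {x:.3f}  ->  D(G(z)) = {score:.3f}")
```

Training would adjust the Generator's parameters to push these scores up and the Discriminator's parameters to push them down.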

The GAN Training Process

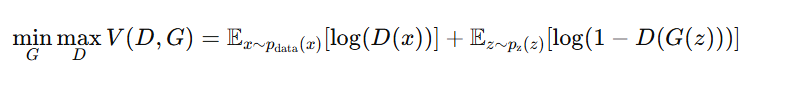

GAN training is inherently adversarial: the Generator and the Discriminator are trained together, in practice usually by alternating gradient updates between the two, in a process called minimax optimization. The objective is to find weights for both networks at which neither can improve by changing its own weights alone.

Mathematically, the training process can be framed as a minimax game between the Generator and the Discriminator:

- The Generator seeks to minimize the probability that the Discriminator correctly identifies generated data as fake.

- The Discriminator aims to maximize its ability to correctly classify data as real or fake.

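This leads to the following optimization problem:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}}[\log D(x)] + \mathbb{E}_{z \sim p_z}[\log(1 - D(G(z)))]$$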

Where:

- G(z) is the data generated by the Generator.

- D(x) is the probability that x is real, according to the Discriminator.

- p_data is the distribution of real data, and p_z is the distribution of random noise.

In theory, this game reaches equilibrium when the Generator's distribution matches the real data distribution. At that point the Discriminator can do no better than output a probability of 0.5 for every input, because generated data is indistinguishable from real data.
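The value being fought over can be estimated from samples. The sketch below, under toy assumptions (one-dimensional Gaussian "real" data, an untrained identity Generator, and two hand-written Discriminators), computes a Monte Carlo estimate of the standard GAN value function: the average of log D(x) over real data plus the average of log(1 - D(G(z))) over noise. All function and variable names are hypothetical.

```python
import math
import random


def value_function(discriminator, generator, real_samples, noise_samples):
    """Monte Carlo estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))]."""
    tiny = 1e-12  # guards against log(0) if D saturates at exactly 0 or 1
    real_term = sum(math.log(discriminator(x) + tiny) for x in real_samples)
    fake_term = sum(
        math.log(1.0 - discriminator(generator(z)) + tiny) for z in noise_samples
    )
    return real_term / len(real_samples) + fake_term / len(noise_samples)


def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))


def untrained_generator(z):
    """Passes noise through unchanged, so fake data is centered at 0."""
    return z


def sharp_discriminator(x):
    """Calls values well above 1.0 'real'; works because real data is centered at 2."""
    return sigmoid(4.0 * (x - 1.0))


def fooled_discriminator(x):
    """Cannot tell real from fake: outputs 0.5 for every input."""
    return 0.5


rng = random.Random(0)
real_samples = [rng.gauss(2.0, 0.5) for _ in range(2000)]   # assumed real data
noise_samples = [rng.gauss(0.0, 1.0) for _ in range(2000)]  # latent noise z

v_sharp = value_function(
    sharp_discriminator, untrained_generator, real_samples, noise_samples
)
v_fooled = value_function(
    fooled_discriminator, untrained_generator, real_samples, noise_samples
)
print(f"V with a sharp Discriminator:  {v_sharp:.3f}")
print(f"V with a fooled Discriminator: {v_fooled:.3f}")
```

A Discriminator that outputs 0.5 everywhere gives V = 2 log 0.5 = -log 4, about -1.386, which is the value at the theoretical equilibrium. A Discriminator that separates real from fake pushes V up toward its maximum of 0, which is what the Generator tries to prevent.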

Types of GANs

- Vanilla GANs: These are the basic GANs, where both the Generator and the Discriminator are simple neural networks. While effective, they are prone to training instability.

- Deep Convolutional GANs (DCGANs): DCGANs use convolutional layers in both the Generator and Discriminator, making them more effective for generating high-quality images, as they are able to capture spatial hierarchies and patterns.

- Conditional GANs (CGANs): CGANs introduce additional information, or conditions, such as labels or attributes, to the GANs, enabling the generation of specific types of data (e.g., generating images of a particular object or category).

- Wasserstein GANs (WGANs): WGANs address the training instability issue in traditional GANs by using a new loss function that is based on the Wasserstein distance, making the training process smoother and more stable.

- CycleGANs: CycleGANs are designed for unsupervised image-to-image translation tasks, such as converting a photo into a painting or transferring an image from one domain to another (e.g., winter to summer photos). Unlike paired image-to-image translation methods such as pix2pix, they do not require paired training data.
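To give a feel for the Wasserstein distance that WGANs build on, here is a small sketch for the special case of one-dimensional samples of equal size, where the Wasserstein-1 distance reduces to the mean absolute difference between sorted samples. This is only an illustration of the distance itself. An actual WGAN does not sort samples; it estimates the distance with a trained critic network. The function name is hypothetical.

```python
def wasserstein_1d(samples_a, samples_b):
    """Wasserstein-1 distance between two equal-size 1-D empirical samples."""
    if len(samples_a) != len(samples_b):
        raise ValueError("this sketch assumes equal sample counts")
    pairs = zip(sorted(samples_a), sorted(samples_b))
    return sum(abs(a - b) for a, b in pairs) / len(samples_a)


real = [0.0, 1.0, 2.0, 3.0]
far_fake = [x + 5.0 for x in real]    # generated data far from the real data
near_fake = [x + 0.5 for x in real]   # generated data close to the real data

print(wasserstein_1d(real, far_fake))   # distance when samples are far apart
print(wasserstein_1d(real, near_fake))  # smaller distance when they are close
```

The distance shrinks steadily as the generated samples move toward the real ones, even when the two sets do not overlap. That gradual signal is one intuition for why a Wasserstein-based loss can make training smoother.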

Applications of GANs

GANs have found widespread applications in various fields due to their ability to generate high-quality synthetic data:

- Image Generation: GANs are extensively used for generating realistic images of people, objects, and scenes, and they have been employed in systems such as deepfakes.

- Image Editing: GANs allow users to edit images by removing or altering certain features, such as changing facial expressions, adding objects to images, or superimposing elements like text or logos.

- Data Augmentation: GANs can generate additional training data for machine learning models, which is especially useful in fields like healthcare, where labeled data can be scarce.

- Style Transfer: GANs are used for transferring the artistic style of one image to another, such as turning a photograph into a painting in the style of Van Gogh or Picasso.

- Super-Resolution: GANs are used to improve the quality of low-resolution images, making them suitable for applications like medical imaging or satellite photography.

Diffusion Models: A New Frontier in Generative AI

While GANs have been widely successful in various applications, Diffusion Models have recently gained prominence as a powerful alternative for generative modeling, especially in image generation. Diffusion models are generally more stable to train and produce high-quality results, and they are less prone to issues like mode collapse, which can plague GANs.

What Are Diffusion Models?

Diffusion models are a class of generative models that simulate a process where data (e.g., an image) is progressively noised over time until it becomes pure noise. Then, the model learns how to reverse this noising process, gradually denoising the data to generate new, synthetic samples.

The process is broken down into two phases:

- Forward Diffusion Process (Noise Addition): In this step, noise is gradually added to the data over several timesteps. This process is designed to irreversibly destroy the data's structure until it becomes indistinguishable from random noise.

- Reverse Diffusion Process (Denoising): After the forward process, the model is trained to reverse the noise addition and restore the data to its original form. This reverse process can be seen as denoising the data step-by-step, where each step refines the data closer to its original state.
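The forward process can be sketched in a few lines. The example below assumes a linear noise schedule with values commonly used in the DDPM formulation (from 1e-4 to 0.02 over 1000 steps) and a single scalar data point. The schedule, the function names, and the closed-form jump to timestep t are assumptions for illustration; the text above does not fix any of them.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (an assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_signal(betas):
    """Running product of (1 - beta): the fraction of signal variance left at each t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def forward_noise(x0, t, alpha_bars, rng):
    """Jump straight to timestep t: x_t = sqrt(a_t) * x0 + sqrt(1 - a_t) * noise."""
    noise = rng.gauss(0.0, 1.0)
    x_t = math.sqrt(alpha_bars[t]) * x0 + math.sqrt(1.0 - alpha_bars[t]) * noise
    return x_t, noise


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_signal(betas)

rng = random.Random(0)
x0 = 1.0  # a single "clean" data value
for t in (0, 250, 500, 999):
    x_t, _ = forward_noise(x0, t, alpha_bars, rng)
    print(f"t={t:4d}  signal fraction={alpha_bars[t]:.5f}  x_t={x_t:+.3f}")
```

Early timesteps leave the data almost untouched, while by the last timestep almost none of the original signal remains and the sample is close to pure Gaussian noise.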

How Diffusion Models Work

In more technical terms, a diffusion model defines a probabilistic transition from the real data to noise and learns to reverse this transition. This can be understood as a series of denoising autoencoders trained on noisy data to progressively recover the clean data.

- Training Phase: The model is shown training samples that have been noised to a randomly chosen timestep of the forward diffusion process and is trained to predict the noise that was added. By learning how to remove noise at every noise level, it learns how to reverse the diffusion process during sampling.

- Sampling Phase: Once trained, new data is generated by starting with pure noise and iteratively applying the learned reverse process to transform the noise into a realistic sample (e.g., a photo, a piece of music, or text).
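The two phases can be sketched end to end on a toy problem. To keep the example runnable without a neural network, it assumes the data is a one-dimensional Gaussian, for which the ideal noise prediction has a closed form; that formula stands in for a trained model. The linear schedule, the DDPM-style reverse step, and every name below are assumptions made for illustration.

```python
import math
import random


def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (an assumed choice) and cumulative signal fractions."""
    betas = [
        beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        for i in range(num_steps)
    ]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars


def ideal_noise_predictor(x_t, t, alpha_bars, data_mean, data_std):
    """Closed-form E[noise | x_t] for Gaussian data; stands in for a trained network."""
    ab = alpha_bars[t]
    marginal_var = ab * data_std ** 2 + (1.0 - ab)
    return math.sqrt(1.0 - ab) * (x_t - math.sqrt(ab) * data_mean) / marginal_var


def noise_prediction_loss(predict_noise, data, alpha_bars, rng):
    """Training objective: mean squared error between true and predicted noise."""
    total = 0.0
    for x0 in data:
        t = rng.randrange(len(alpha_bars))  # random timestep for this sample
        noise = rng.gauss(0.0, 1.0)
        x_t = math.sqrt(alpha_bars[t]) * x0 + math.sqrt(1.0 - alpha_bars[t]) * noise
        total += (noise - predict_noise(x_t, t)) ** 2
    return total / len(data)


def sample(predict_noise, betas, alpha_bars, rng):
    """Sampling: start from pure noise and denoise step by step, t = T-1 down to 0."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(len(betas))):
        predicted = predict_noise(x, t)
        mean = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * predicted)
        mean /= math.sqrt(1.0 - betas[t])
        fresh_noise = rng.gauss(0.0, 1.0) if t > 0 else 0.0  # none on the last step
        x = mean + math.sqrt(betas[t]) * fresh_noise
    return x


DATA_MEAN, DATA_STD = 3.0, 0.5  # assumed toy data distribution
rng = random.Random(0)
betas, alpha_bars = make_schedule()


def predictor(x_t, t):
    return ideal_noise_predictor(x_t, t, alpha_bars, DATA_MEAN, DATA_STD)


data = [rng.gauss(DATA_MEAN, DATA_STD) for _ in range(2000)]
ideal_loss = noise_prediction_loss(predictor, data, alpha_bars, rng)
zero_loss = noise_prediction_loss(lambda x_t, t: 0.0, data, alpha_bars, rng)

samples = [sample(predictor, betas, alpha_bars, rng) for _ in range(400)]
sample_mean = sum(samples) / len(samples)
sample_std = math.sqrt(sum((s - sample_mean) ** 2 for s in samples) / len(samples))
print(f"loss (ideal predictor): {ideal_loss:.3f}, loss (always zero): {zero_loss:.3f}")
print(f"generated samples: mean={sample_mean:.2f}, std={sample_std:.2f}")
```

The ideal predictor reaches a much lower noise-prediction loss than a predictor that always outputs zero, and samples generated from pure noise land close to the assumed data distribution (mean 3.0, standard deviation 0.5). In a real diffusion model, a neural network trained on that same loss takes the place of `ideal_noise_predictor`.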

Advantages of Diffusion Models

- Stable Training: Unlike GANs, which suffer from training instability, diffusion models tend to be more stable to train, with fewer risks of issues like mode collapse.

- Better Coverage of Data Distribution: Diffusion models tend to cover more of the modes of the data distribution, whereas GANs might miss certain modes of the data.

- High-Quality Generation: Diffusion models generate high-quality samples with fine-grained details. They have shown remarkable success in tasks such as image generation and denoising.

Applications of Diffusion Models

- Image Generation: Diffusion models like DALL·E 2 and Stable Diffusion have become popular for generating high-quality images from textual descriptions. These models generate coherent, detailed images that match user-provided prompts.

- Text-to-Image Generation: By pairing text descriptions with images, diffusion models can generate images that align with detailed textual input, making them useful for creative applications such as advertising and concept art.

- Super-Resolution: Just like GANs, diffusion models can be used for enhancing the resolution of images, turning blurry or pixelated images into high-definition versions.

- Audio and Speech Generation: Diffusion models are also being applied to the domain of audio generation, where they can be used to generate high-quality, realistic audio such as music or human speech.

Challenges and Future Directions

While GANs and Diffusion Models are powerful tools for generative modeling, both face certain challenges:

Challenges in GANs

- Training Instability: GANs are difficult to train due to issues like mode collapse, vanishing gradients, and non-convergence. It is challenging to keep the Generator and Discriminator balanced so that neither overpowers the other and both continue to improve.

- Evaluation: Measuring the quality of generated data is subjective and difficult. While some evaluation metrics like Fréchet Inception Distance (FID) exist, they don’t always correlate with human judgment.

- Lack of Control: GANs struggle with providing fine-grained control over generated content. For instance, controlling the style or structure of an image can be difficult.
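To make the Fréchet distance behind FID more concrete, the sketch below computes it for the simplest possible case: two sets of one-dimensional values, each summarized by a mean and a standard deviation. In that case the distance reduces to the squared difference of the means plus the squared difference of the standard deviations. The real FID applies the multivariate version of this formula to features extracted by an Inception network, which this example does not attempt. The function name is hypothetical.

```python
import statistics


def frechet_distance_1d(samples_a, samples_b):
    """Frechet distance between 1-D Gaussians fitted to two sets of values."""
    mean_a, mean_b = statistics.fmean(samples_a), statistics.fmean(samples_b)
    std_a, std_b = statistics.pstdev(samples_a), statistics.pstdev(samples_b)
    return (mean_a - mean_b) ** 2 + (std_a - std_b) ** 2


real_features = [0.0, 1.0, 2.0, 3.0]
shifted_features = [x + 2.0 for x in real_features]  # same spread, different mean

print(frechet_distance_1d(real_features, real_features))     # identical sets
print(frechet_distance_1d(real_features, shifted_features))  # mean shifted by 2
```

Because the score depends only on means and spreads of features, two sets of images can receive a similar score while looking quite different to a person, which is one reason such metrics do not always track human judgment.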

Challenges in Diffusion Models

- Computational Complexity: Diffusion models require multiple timesteps to generate data, which can lead to longer generation times compared to GANs. This makes them more computationally expensive.

- Scaling: Although diffusion models perform well in generating high-quality samples, they may face challenges when scaled to larger datasets or more complex applications.

Future Directions

- Improved Architectures: Research is ongoing into improving the architectures of GANs and Diffusion Models to make them more efficient and capable of generating even more complex data.

- Hybrid Approaches: Combining the strengths of both GANs and Diffusion Models could result in new hybrid approaches that benefit from the training stability of diffusion models and the fast, single-pass generation of GANs.

- Multi-modal Models: Future research may focus on creating multi-modal generative models that can generate data across different modalities (e.g., text, images, video, and audio) based on a unified framework.

In conclusion, both GANs and Diffusion Models represent cutting-edge techniques in Generative AI, each with its unique strengths and applications. As research progresses, these models are expected to evolve further, offering even more exciting possibilities for content generation, creative applications, and problem-solving in a variety of fields.
