AdaBoost: A Powerful Boosting Algorithm

Introduction

Adaptive Boosting, commonly known as AdaBoost, is a machine learning algorithm that combines many weak classifiers into a single strong one. It trains them sequentially, with each new classifier focusing on the mistakes of the ones before it. It belongs to the family of boosting methods and is widely used in classification tasks.

How AdaBoost Works – Step-by-Step Explanation

AdaBoost is an ensemble learning technique that builds a strong classifier by combining multiple weak learners (typically decision stumps). Instead of treating all training samples equally throughout training, AdaBoost reweights them after each iteration so that later learners concentrate on the cases earlier learners got wrong. Here's how it works:

Initialize Weights

- Each training sample is given equal importance (weight) initially.

- If there are N training samples, each gets a weight of 1/N.

- These weights determine how much influence a sample has in training the weak learner.
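A minimal NumPy sketch of this initialization. The dataset size `n_samples = 8` is an arbitrary assumption for illustration.

```python
import numpy as np

n_samples = 8  # hypothetical number of training samples (N)

# Every sample starts with the same weight, 1/N, so the weights sum to 1.
weights = np.full(n_samples, 1.0 / n_samples)

print(weights)        # eight copies of 0.125
print(weights.sum())  # 1.0
```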

Train a Weak Learner

- A weak learner (usually a decision stump, i.e., a one-level decision tree) is trained on the dataset.

- The classifier tries to separate the data based on a simple rule (e.g., "If X > 5, predict A; otherwise, predict B").
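To make the stump concrete, here is a from-scratch sketch of a weighted decision stump in Python with NumPy. It assumes labels coded as -1/+1 and simply tries every feature, every observed value as a threshold, and both directions of the rule, keeping the one with the lowest weighted error. The function names `fit_stump` and `predict_stump` are illustrative, not from any library.

```python
import numpy as np

def predict_stump(X, feature, threshold, polarity):
    # Predict +1 when polarity * (x[feature] - threshold) > 0, otherwise -1.
    return np.where(polarity * (X[:, feature] - threshold) > 0, 1, -1)

def fit_stump(X, y, w):
    """Return (feature, threshold, polarity, weighted_error) of the best stump.

    X: (n_samples, n_features); y: labels in {-1, +1}; w: weights summing to 1.
    """
    best = (0, 0.0, 1, np.inf)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for polarity in (1, -1):
                pred = predict_stump(X, j, thr, polarity)
                err = w[pred != y].sum()  # total weight of the misclassified samples
                if err < best[3]:
                    best = (j, thr, polarity, err)
    return best

# Toy data with one feature: class +1 exactly when x > 5 (like "If X > 5, predict A").
X = np.array([[1.0], [2.0], [4.0], [5.0], [6.0], [8.0], [9.0]])
y = np.array([-1, -1, -1, -1, 1, 1, 1])
w = np.full(len(y), 1.0 / len(y))

feature, threshold, polarity, err = fit_stump(X, y, w)
print(feature, threshold, polarity, err)  # 0 5.0 1 0.0
```

An exhaustive search like this is fine for small data; library implementations sort each feature once and scan thresholds incrementally instead.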

Calculate Errors

- The weak model makes predictions on the training data.

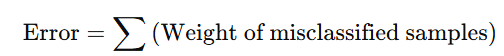

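The weighted error rate $\epsilon_t$ of the weak learner $h_t$ is the total weight of the samples it misclassifies (with the weights normalized to sum to 1):

$$\epsilon_t = \sum_{i=1}^{N} w_i \, \mathbb{1}\left[h_t(x_i) \neq y_i\right]$$

- A low $\epsilon_t$ means the learner performs well on the currently weighted data, and misclassifying a high-weight sample raises the error more than misclassifying a low-weight one. For two classes, a weak learner only needs $\epsilon_t < 0.5$, i.e., to do better than random guessing.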
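A small numeric sketch of the weighted error, using made-up labels, weights, and predictions. Both hypothetical models make one mistake out of four, so their plain error rates match, but the mistake on the high-weight sample costs far more.

```python
import numpy as np

y = np.array([1, -1, 1, 1])          # true labels (hypothetical)
w = np.array([0.1, 0.1, 0.1, 0.7])   # current sample weights, summing to 1

pred_a = np.array([1, -1, -1, 1])    # wrong only on index 2 (weight 0.1)
pred_b = np.array([1, -1, 1, -1])    # wrong only on index 3 (weight 0.7)

def weighted_error(y, pred, w):
    # Sum of the weights of the misclassified samples.
    return w[pred != y].sum()

print(np.mean(pred_a != y), weighted_error(y, pred_a, w))  # 0.25 0.1
print(np.mean(pred_b != y), weighted_error(y, pred_b, w))  # 0.25 0.7
```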

Update Weights

- Misclassified samples get higher weights, meaning they will have more influence in the next iteration.

- Correctly classified samples get lower weights, so the model pays less attention to them.

- The goal is to make the model focus on hard-to-classify examples in subsequent iterations.
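In the standard (discrete) AdaBoost formulation with labels in {-1, +1}, the update is multiplicative. Each learner first gets an importance $\alpha_t = \tfrac{1}{2}\ln\frac{1-\epsilon_t}{\epsilon_t}$. Every weight is then multiplied by $\exp(-\alpha_t y_i h_t(x_i))$, which equals $e^{\alpha_t}$ (an increase) for a mistake and $e^{-\alpha_t}$ (a decrease) for a correct prediction, and the weights are renormalized to sum to 1. The sketch below applies one such update to ten equally weighted, made-up samples where the learner misses only one. The helper name `update_weights` is hypothetical.

```python
import numpy as np

def update_weights(w, y, pred):
    """One discrete-AdaBoost round: return (alpha, new normalized weights)."""
    eps = w[pred != y].sum()                # weighted error of this learner
    eps = np.clip(eps, 1e-10, 1 - 1e-10)    # guard against log(0) and division by zero
    alpha = 0.5 * np.log((1 - eps) / eps)   # learner importance
    w_new = w * np.exp(-alpha * y * pred)   # mistakes grow, correct samples shrink
    return alpha, w_new / w_new.sum()       # renormalize so weights sum to 1

y = np.array([-1] * 5 + [1] * 5)
pred = y.copy()
pred[2] = 1                                 # the learner misclassifies index 2 only
w = np.full(10, 0.1)

alpha, w = update_weights(w, y, pred)
print(round(alpha, 4))  # 1.0986  (= 0.5 * ln 9)
print(round(w[2], 4))   # 0.5     (the single mistake now holds half the total weight)
print(round(w[0], 4))   # 0.0556  (each correct sample: 0.5 / 9)
```

After every update the misclassified samples together hold exactly half of the total weight, which is why a single mislabeled point can come to dominate training.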

Repeat the Process

- The training, error-calculation, and weight-update steps are repeated for a predefined number of iterations or until the model reaches a desired accuracy.

- Each new weak learner is trained on the updated weighted dataset (i.e., giving more importance to the previously misclassified samples).
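Putting the steps together, here is a compact sketch of the full training loop plus the weighted-vote prediction. To keep it short it borrows scikit-learn's `DecisionTreeClassifier(max_depth=1)` as the stump, trained with `sample_weight`; note that this tree chooses splits by weighted impurity rather than by directly minimizing weighted error, a common simplification. Labels are assumed to be -1/+1, and the names `adaboost_fit` and `adaboost_predict` are illustrative.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

def adaboost_fit(X, y, n_rounds=50):
    """Train discrete AdaBoost; y must contain labels -1 and +1."""
    n = len(y)
    w = np.full(n, 1.0 / n)                  # initialize: equal weights 1/N
    stumps, alphas = [], []
    for _ in range(n_rounds):
        stump = DecisionTreeClassifier(max_depth=1)
        stump.fit(X, y, sample_weight=w)     # train a weak learner on weighted data
        pred = stump.predict(X)
        eps = w[pred != y].sum()             # weighted error
        if eps >= 0.5:                       # no better than chance: stop early
            break
        eps = max(eps, 1e-10)                # guard against log(0) for a perfect stump
        alpha = 0.5 * np.log((1 - eps) / eps)
        w = w * np.exp(-alpha * y * pred)    # up-weight mistakes, down-weight hits
        w /= w.sum()
        stumps.append(stump)
        alphas.append(alpha)
    return stumps, alphas

def adaboost_predict(X, stumps, alphas):
    # Weighted vote: sign of the alpha-weighted sum of the stumps' predictions.
    score = sum(a * s.predict(X) for a, s in zip(alphas, stumps))
    return np.where(score >= 0, 1, -1)

X, y01 = make_classification(n_samples=300, n_features=5, random_state=0)
y = np.where(y01 == 1, 1, -1)                # recode {0, 1} as {-1, +1}

stumps, alphas = adaboost_fit(X, y, n_rounds=30)
train_acc = np.mean(adaboost_predict(X, stumps, alphas) == y)
print(f"{len(stumps)} stumps, training accuracy {train_acc:.3f}")
```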

Final Prediction

- All weak learners are combined using a weighted majority vote.

- A model with lower error is assigned higher importance in the final decision.

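- The final strong classifier takes the sign of the weighted sum of all the weak classifiers' predictions. With labels coded as -1/+1 and $\alpha_t$ denoting the importance of learner $h_t$: $H(x) = \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t h_t(x)\right)$.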
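A tiny worked example of why a weighted vote can disagree with a simple majority vote. The three learner importances and their votes for one sample are made-up numbers, with labels coded as -1/+1.

```python
import numpy as np

alphas = np.array([0.9, 0.3, 0.3])  # hypothetical learner importances
preds = np.array([1, -1, -1])       # each weak learner's vote for one sample

majority = np.sign(preds.sum())              # unweighted vote: 1 - 1 - 1 < 0, so -1
weighted = np.sign(np.dot(alphas, preds))    # 0.9 - 0.3 - 0.3 > 0, so +1
print(majority, weighted)
```

The single accurate learner outvotes the two weaker ones, which is exactly the behavior the importance weights are meant to produce.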

Example in Simple Terms

Imagine a teacher training students for an exam:

- Initially, all topics get equal focus (weights).

- After a test, the teacher identifies difficult topics (misclassified samples).

- In the next class, more attention is given to those difficult topics (weight adjustment).

- The process is repeated multiple times, gradually improving overall understanding.

- Finally, students perform well across all topics, much as AdaBoost's combined model becomes more accurate as each new learner corrects earlier mistakes.

Advantages of AdaBoost

- Improves Weak Learners: Turns weak classifiers into a strong predictive model.

- High Accuracy: Often outperforms individual classifiers by reducing bias.

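- Feature Selection: When decision stumps are the weak learners, each stump splits on a single feature, so the features the ensemble relies on most indicate which inputs matter.

- Flexible: In principle, any classifier that supports weighted training samples (e.g., decision trees, SVMs, or neural networks) can serve as the weak learner, although shallow decision trees are by far the most common choice.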

Disadvantages of AdaBoost

- Sensitive to Noisy Data: Outliers and mislabeled data can heavily impact performance.

- Overfitting Risk: Too many iterations can lead to overfitting on training data.

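- Computational Cost: Learners are trained one after another, each depending on the weights produced by the previous one, so training cannot be parallelized across iterations and its cost grows with the number of estimators.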

Hyperparameter Tuning for AdaBoost

To optimize AdaBoost performance, key hyperparameters include:

- Number of Estimators (n_estimators): Defines the number of weak learners to train.

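- Learning Rate (learning_rate): Shrinks the contribution of each weak learner; smaller values typically require more estimators, so the two are usually tuned together.

- Base Estimator (estimator in current scikit-learn, formerly base_estimator): Specifies the type of weak classifier used; the default is a decision stump.

- Algorithm Type (algorithm): SAMME uses each weak learner's discrete class predictions, while SAMME.R uses its class-probability estimates; both support multiclass problems. Recent scikit-learn releases have deprecated SAMME.R.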
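A minimal tuning sketch with scikit-learn's `AdaBoostClassifier` and `GridSearchCV`, assuming a synthetic dataset and an arbitrary grid. It searches only `n_estimators` and `learning_rate` and leaves `estimator` (a decision stump by default) and `algorithm` at their defaults, since those parameters' names and options have changed across scikit-learn versions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

X, y = make_classification(n_samples=500, n_features=10, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42
)

# Tune the number of stumps together with the learning rate.
param_grid = {
    "n_estimators": [50, 100, 200],
    "learning_rate": [0.1, 0.5, 1.0],
}
search = GridSearchCV(AdaBoostClassifier(random_state=42), param_grid, cv=5)
search.fit(X_train, y_train)

print("best parameters:", search.best_params_)
print("test accuracy:", round(search.score(X_test, y_test), 3))
```

Evaluating on a held-out test split, rather than on the cross-validation score used for selection, gives a less optimistic estimate of how the tuned model generalizes.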

Applications of AdaBoost

1. Face Detection

- Widely used in computer vision for detecting human faces in images.

2. Text Classification

- Applied in spam filtering and sentiment analysis.

3. Medical Diagnosis

- Helps in identifying diseases from medical data.

4. Fraud Detection

- Used in financial institutions to detect fraudulent transactions.

Key Takeaways

- AdaBoost enhances weak learners to create a strong classifier.

- It sequentially adjusts weights to focus on misclassified instances.

- Effective for classification but sensitive to noise and outliers.

- Commonly used in face detection, spam filtering, and fraud detection.

- Hyperparameter tuning can improve performance and prevent overfitting.

Next blog: Step-wise Python Implementation of AdaBoost
