Model Monitoring and Maintenance

After deploying a machine learning model, continuous monitoring and maintenance are essential to ensure that the model remains accurate, reliable, and efficient. Models can degrade over time because the data, or the relationship between inputs and outputs, changes (data drift and concept drift), or because of system failures.

a) Performance Tracking

Tracking model performance helps identify any degradation in accuracy, precision, recall, or other key metrics. This is done by logging predictions and evaluating the model's accuracy over time.

1. Logging Predictions and Accuracy Trends

- Keep a record of model predictions and compare them with actual outcomes.

- Track performance metrics like accuracy, precision, recall, F1-score, and Mean Squared Error (MSE).

- Maintain logs to analyze trends and detect performance drops.
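The three practices above can be sketched with the standard library alone. This is a minimal illustration rather than a production logger: the `PredictionLog` class, its `window` parameter, and the sample labels are hypothetical names introduced here.

```python
from collections import deque


class PredictionLog:
    """Keeps the most recent (prediction, actual) pairs and reports rolling accuracy."""

    def __init__(self, window=100):
        # Hypothetical window size: only the newest `window` records are kept
        self.records = deque(maxlen=window)

    def log(self, prediction, actual):
        # Store each prediction next to the outcome observed later
        self.records.append((prediction, actual))

    def rolling_accuracy(self):
        # Fraction of stored predictions that matched the actual outcome
        if not self.records:
            return None
        correct = sum(1 for pred, actual in self.records if pred == actual)
        return correct / len(self.records)


log = PredictionLog(window=4)
for pred, actual in [("spam", "spam"), ("ham", "ham"), ("spam", "ham"), ("ham", "ham")]:
    log.log(pred, actual)
print(f"Rolling accuracy: {log.rolling_accuracy():.2f}")
```

Recording the rolling value at regular intervals gives the accuracy trend described above. A bounded window keeps memory use constant and makes recent drops visible sooner than an all-time average would.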

2. Tools for Performance Tracking

Several tools help automate logging and visualization of model performance:

- MLflow – Tracks experiments, model parameters, and versioning.

- TensorBoard – Visualizes deep learning models, training loss, and accuracy trends.

- Prometheus – Monitors real-time data, collects time-series metrics, and sends alerts.

Example: Logging metrics and parameters with MLflow

import mlflow

mlflow.start_run()

mlflow.log_metric("accuracy", 0.92)

mlflow.log_param("learning_rate", 0.01)

mlflow.end_run()

Example: Tracking Training Performance with TensorBoard

import tensorflow as tf

# Load dataset

mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Normalize data

x_train, x_test = x_train / 255.0, x_test / 255.0

# Create model

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(10, activation="softmax")
])

model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

# Initialize TensorBoard

tensorboard_callback = tf.keras.callbacks.TensorBoard(log_dir="./logs")

# Train model with TensorBoard logging

model.fit(x_train, y_train, epochs=5, validation_data=(x_test, y_test), callbacks=[tensorboard_callback])

Run TensorBoard in Terminal

tensorboard --logdir=./logs

Now, open http://localhost:6006/ in your browser to see the live visualization of training progress.

📌 TensorBoard helps analyze model training, hyperparameter tuning, and debugging in deep learning projects.

Example: Monitoring an ML Model with Prometheus

Step 1: Install Prometheus

Download and extract Prometheus:

wget https://github.com/prometheus/prometheus/releases/download/v2.35.0/prometheus-2.35.0.linux-amd64.tar.gz

tar xvfz prometheus-2.35.0.linux-amd64.tar.gz

cd prometheus-2.35.0.linux-amd64/

Step 2: Define Model Metrics in Python

from prometheus_client import start_http_server, Summary
import random
import time

# Create a metric to track inference latency
REQUEST_TIME = Summary("inference_latency_seconds", "Time spent making predictions")

@REQUEST_TIME.time()
def predict():
    time.sleep(random.uniform(0.1, 0.5))  # Simulate model processing time
    return random.choice(["Class A", "Class B", "Class C"])

# Start Prometheus server
if __name__ == "__main__":
    start_http_server(8000)  # Prometheus scrapes metrics from this port
    while True:
        predict()

Step 3: Configure Prometheus to Scrape Metrics

Edit the prometheus.yml file:

scrape_configs:
  - job_name: "ml_model"
    static_configs:
      - targets: ["localhost:8000"]

Step 4: Run Prometheus

./prometheus --config.file=prometheus.yml

Now, visit http://localhost:9090 to query and analyze model performance in real-time.

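📌 Prometheus collects whatever metrics the service exports (the example above exports only inference latency) and, combined with alerting rules, can notify users when a tracked metric such as accuracy drops or inference time increases.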
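For Prometheus to alert on accuracy, the service has to export accuracy as a metric of its own. The sketch below shows one possible way to do that with a `Gauge` from `prometheus_client`. The metric name `model_accuracy`, the helper `record_accuracy`, and the sample labels are assumptions made for illustration, and the separate registry is used only to keep the example self-contained.

```python
from prometheus_client import CollectorRegistry, Gauge, generate_latest

# A separate registry keeps this sketch isolated from any default metrics
registry = CollectorRegistry()

# Hypothetical metric name; a Gauge can go up or down, which suits accuracy
MODEL_ACCURACY = Gauge(
    "model_accuracy",
    "Accuracy measured on the latest labelled batch",
    registry=registry,
)


def record_accuracy(y_true, y_pred):
    """Compute accuracy for one labelled batch and publish it as a gauge."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    accuracy = correct / len(y_true)
    MODEL_ACCURACY.set(accuracy)
    return accuracy


record_accuracy([1, 0, 1, 1], [1, 0, 0, 1])

# Text that Prometheus would scrape for this registry
print(generate_latest(registry).decode())
```

In a running service the gauge would normally live on the default registry next to the latency metric and be updated whenever a batch of ground-truth labels arrives. The threshold itself would be defined in a Prometheus alerting rule rather than in the Python code.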

b) Handling Concept Drift

1. What is Concept Drift?

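Concept drift occurs when the relationship between the input data and the target changes over time, causing the model to become less effective. It often appears together with data drift, a change in the statistical properties of the inputs themselves, which is what the distribution tests in this section measure. For example: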

- A fraud detection model trained on past transactions might struggle with new fraudulent techniques.

- A stock price prediction model may lose accuracy due to market shifts.

2. Detecting Concept Drift

- Statistical Tests – Compare new data distributions with training data.

- Kolmogorov-Smirnov Test – Checks if the distribution of new data significantly differs from old data.

- Population Stability Index (PSI) – Measures how much a variable's distribution has changed.

Example: Using Kolmogorov-Smirnov test

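import numpy as np
from scipy.stats import ks_2samp

# Sample data for illustration: replace with a feature's training values and recent values
old_data = np.random.normal(50, 10, 1000)
new_data = np.random.normal(55, 12, 1000)

# Compare old vs new data distributions
stat, p_value = ks_2samp(old_data, new_data)

if p_value < 0.05:
    print("Concept drift detected! Model retraining needed.")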

Example: Using Population Stability Index (PSI) for Concept Drift Detection

The Population Stability Index (PSI) compares the distribution of a variable between two time periods and determines if a significant shift has occurred.

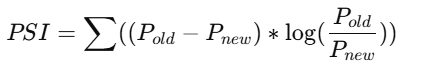

PSI Formula

Where:

🔹 P_old = proportion of observations in a bin for the old dataset

🔹 P_new = proportion of observations in a bin for the new dataset
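In case the formula image does not render, the usual definition of PSI is written out here. It is assumed to be what the image shows, since it matches the Python implementation in this section. The sum runs over the B bins, indexed by i:

```latex
\mathrm{PSI} = \sum_{i=1}^{B} \left( P_{\text{old},i} - P_{\text{new},i} \right) \ln\!\left( \frac{P_{\text{old},i}}{P_{\text{new},i}} \right)
```

Each term is non-negative because the difference and the logarithm always share the same sign, so PSI is zero only when the two distributions match bin by bin. As a small worked case with two bins, old proportions (0.5, 0.5) and new proportions (0.6, 0.4) give (0.5 − 0.6) ln(0.5/0.6) + (0.5 − 0.4) ln(0.5/0.4) ≈ 0.018 + 0.022 ≈ 0.041.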

PSI Interpretation

🔹 PSI < 0.1 → No significant change

🔹 0.1 ≤ PSI < 0.25 → Moderate change, needs monitoring

🔹 PSI ≥ 0.25 → Significant drift detected! Retraining required

Python Implementation of PSI Calculation

import numpy as np

def calculate_psi(expected, actual, bins=10):
    """
    Calculates Population Stability Index (PSI)

    expected: Old dataset (reference)
    actual: New dataset (incoming data)
    bins: Number of bins to divide data
    """
    # Create bins based on old data distribution
    breakpoints = np.linspace(np.min(expected), np.max(expected), bins + 1)

    # Clip new values into the reference range so that out-of-range points
    # land in the edge bins instead of being dropped by np.histogram
    actual = np.clip(actual, breakpoints[0], breakpoints[-1])

    # Get bin counts for old and new data
    expected_counts, _ = np.histogram(expected, bins=breakpoints)
    actual_counts, _ = np.histogram(actual, bins=breakpoints)

    # Convert counts to proportions
    expected_perc = expected_counts / np.sum(expected_counts)
    actual_perc = actual_counts / np.sum(actual_counts)

    # Replace 0s with small values to avoid division errors
    expected_perc = np.where(expected_perc == 0, 0.0001, expected_perc)
    actual_perc = np.where(actual_perc == 0, 0.0001, actual_perc)

    # Compute PSI score
    psi_values = (expected_perc - actual_perc) * np.log(expected_perc / actual_perc)
    psi_score = np.sum(psi_values)

    return psi_score

# Example Data: Old vs New Feature Distribution
old_data = np.random.normal(50, 10, 1000)  # Old data
new_data = np.random.normal(55, 12, 1000)  # New data with drift

# Calculate PSI
psi_score = calculate_psi(old_data, new_data)

# Output PSI result
print(f"PSI Score: {psi_score}")

if psi_score >= 0.25:
    print("Significant concept drift detected! Model retraining needed.")
elif psi_score >= 0.1:
    print("Moderate drift detected. Monitor the model.")
else:
    print("No significant drift. Model is stable.")

3. Retraining the Model

- Periodically retrain the model with fresh data.

- Use automated pipelines to schedule retraining at regular intervals.

- Implement online learning where the model continuously learns from new data.
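The online-learning option can be sketched with scikit-learn's `partial_fit`, which updates an existing model one mini-batch at a time. This is an illustration under assumptions, not a recommended setup: the choice of `SGDClassifier`, the synthetic batches, and the batch size are all made up for the example.

```python
import numpy as np
from sklearn.linear_model import SGDClassifier

rng = np.random.default_rng(0)
classes = np.array([0, 1])  # every label must be declared on the first partial_fit call

model = SGDClassifier(random_state=0)

# Simulate mini-batches arriving over time (synthetic data, for illustration only)
for batch in range(5):
    X_batch = rng.normal(size=(200, 3))
    y_batch = (X_batch[:, 0] + X_batch[:, 1] > 0).astype(int)
    # Update the existing weights instead of retraining from scratch
    model.partial_fit(X_batch, y_batch, classes=classes)

X_new = rng.normal(size=(5, 3))
print(model.predict(X_new))
```

Only estimators that implement `partial_fit` support this pattern. Other models need the periodic full retraining described in the first two points.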

Example: Auto-retraining with scikit-learn

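from sklearn.model_selection import train_test_split

# Assumes `new_data` (features) and `labels` hold the latest labelled samples
# and `model` is an existing scikit-learn estimator
X_train, X_test, y_train, y_test = train_test_split(new_data, labels, test_size=0.2)

# Train a new model with updated data
model.fit(X_train, y_train)

# Check the retrained model on the held-out split before promoting it
print(f"Hold-out score: {model.score(X_test, y_test):.3f}")

Automating Model Retraining with Pipelines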

Instead of manually retraining, automated pipelines streamline the process.

Tools for Automated Retraining

- Apache Airflow – Orchestrates end-to-end retraining workflows.

- Kubeflow Pipelines – Automates ML model training in cloud environments.

- MLflow – Tracks model versions in its Model Registry and provides tooling for deploying registered models.

Example: Using Airflow for Scheduled Model Retraining

from datetime import datetime

from airflow import DAG
from airflow.operators.python import PythonOperator

import train_model  # A script that retrains the model

default_args = {
    "owner": "ML Team",
    "start_date": datetime(2024, 2, 1),
}

# The schedule is a DAG argument; placed inside default_args it would be ignored
dag = DAG(
    "model_retraining",
    default_args=default_args,
    schedule="@weekly",  # Retrains the model weekly (Airflow 2.4+; use schedule_interval on older 2.x)
    catchup=False,
)

train_task = PythonOperator(
    task_id="retrain_model",
    python_callable=train_model.run,  # Calls the function that trains the model
    dag=dag,
)

c) Implementing Alerting Systems

1. Triggering Alerts for Anomalies

Anomalies in predictions (such as a sudden drop in accuracy or unexpected outputs) must trigger alerts so that issues can be addressed.

- Set accuracy thresholds – If accuracy drops below a certain level, trigger an alert.

- Detect outliers – Flag unexpected predictions.

- Use monitoring dashboards – Send alerts via Slack, email, or SMS.

Example: Setting an alert if accuracy falls below a threshold

accuracy = 0.75  # Current model accuracy
threshold = 0.80

if accuracy < threshold:
    print("Warning: Model accuracy has dropped! Consider retraining.")

Example: Detecting Outliers in Predictions

If a model starts producing outlier predictions, it could indicate concept drift or incorrect inputs. We can use Z-score detection to flag anomalies.

import numpy as np
from scipy.stats import zscore

# Sample predictions. With n points the largest possible Z-score is sqrt(n - 1),
# so a threshold of 3 can only trigger when there are more than 10 samples.
predictions = np.array([50, 52, 49, 51, 48, 50, 53, 47, 51, 49, 50, 52, 500])  # Last value is an anomaly

# Calculate Z-scores (how far each value is from the mean, in standard deviations)
z_scores = np.abs(zscore(predictions))

# Flag values where Z-score > 3 (typically considered an outlier)
anomalies = predictions[z_scores > 3]

if len(anomalies) > 0:
    print(f"Anomalies detected in predictions: {anomalies}")

Use case: Fraud detection, financial models, or any scenario where unexpected predictions must be flagged.

Example: Sending Alerts via Slack for Model Performance Issues

To automate alerts, we can send messages to Slack, Email, or SMS whenever an issue occurs. Here’s how to send a Slack alert when model accuracy drops:

import requests

def send_slack_alert(message):
    webhook_url = "https://hooks.slack.com/services/your/webhook/url"  # Replace with actual Slack webhook URL
    payload = {"text": message}
    # A timeout keeps the monitoring job from hanging if Slack is unreachable
    requests.post(webhook_url, json=payload, timeout=10)

# Check model accuracy and send alert
accuracy = 0.75
threshold = 0.80

if accuracy < threshold:
    send_slack_alert(f"🚨 Model accuracy has dropped to {accuracy:.2f}. Consider retraining! 🚨")

Use case: Automated notifications for real-time monitoring systems.

2. Deploying Fail-Safe Mechanisms

- Rollback to previous model versions – If the new model underperforms, revert to an earlier version.

- Shadow Deployment – Test the new model alongside the old one before fully switching.

- A/B Testing – Deploy two models and compare results before selecting the best one.

Example: Using MLflow for model versioning

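import mlflow

# Load the model version currently registered in the Production stage.
# To roll back to a specific earlier version, use a URI of the form
# "models:/fraud_detection/<version>" instead.
model = mlflow.pyfunc.load_model("models:/fraud_detection/Production")

Example: Shadow Deployment with FastAPI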

Shadow deployment allows testing a new model without affecting users. The new model runs in parallel, but its predictions are logged instead of used.

from typing import List

from fastapi import FastAPI
import joblib
import numpy as np

app = FastAPI()

# Load old and new models
old_model = joblib.load("old_model.joblib")
new_model = joblib.load("new_model.joblib")

@app.post("/predict/")
def predict(data: List[float]):
    # The request body is a JSON array holding one sample's feature values
    input_data = np.array(data).reshape(1, -1)

    # Primary prediction (serves users)
    old_prediction = old_model.predict(input_data)

    # Shadow model prediction (logged for analysis)
    new_prediction = new_model.predict(input_data)
    print(f"Shadow Model Prediction: {new_prediction}")  # Log results for comparison

    return {"prediction": old_prediction.tolist()}

✅ Use case: Ensures the new model works correctly before full deployment.

Example: A/B Testing Using Flask

A/B testing deploys two versions of the model and randomly assigns users to test performance.

from flask import Flask, request, jsonify
import joblib
import random
import numpy as np

app = Flask(__name__)

# Load models
model_A = joblib.load("model_A.joblib")
model_B = joblib.load("model_B.joblib")

@app.route('/predict', methods=['POST'])
def predict():
    data = np.array(request.json['data']).reshape(1, -1)

    # Randomly assign each request to a model (50/50 split)
    model_name = "A" if random.random() < 0.5 else "B"
    chosen_model = model_A if model_name == "A" else model_B

    prediction = chosen_model.predict(data)

    return jsonify({"model_used": model_name, "prediction": prediction.tolist()})

if __name__ == '__main__':
    app.run(debug=True)  # Debug mode is for local testing only

✅ Use case: Helps select the best-performing model before full deployment.
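Once both models have served enough traffic, their results still need to be compared in a way that separates a real difference from noise. One common option, sketched here with the standard library only, is a two-proportion z-test on the number of correct predictions per model. The function name and the counts are hypothetical, and the test assumes independent requests and reasonably large samples.

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Return (z, two-sided p-value) for the difference between two success rates."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled rate under the null hypothesis that both models perform equally
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts of correct predictions gathered for each model
z, p_value = two_proportion_z_test(successes_a=820, total_a=1000,
                                   successes_b=860, total_b=1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value (for example below 0.05) suggests that the gap between the two models is unlikely to be due to chance alone. A large one means the test should keep running or the models should be treated as equivalent.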
