Step-wise Python Implementation of AdaBoost

AdaBoost (Adaptive Boosting) is an ensemble learning technique that builds a strong model from a sequence of weak learners. After each round it increases the weights of the samples the current learner misclassified, so the next learner concentrates on them. It can be used for both classification and regression tasks.
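To make the reweighting idea concrete, here is a minimal from-scratch sketch of binary (discrete) AdaBoost with threshold stumps, using only NumPy. It is an illustration under simplifying assumptions, not what scikit-learn runs internally: labels are assumed to be -1/+1, and the helper names (fit_stump, stump_predict, adaboost_fit, adaboost_predict) and the toy data are made up for this example.

```python
import numpy as np

def fit_stump(X, y, w):
    """Exhaustively pick the (feature, threshold, polarity) with lowest weighted error."""
    best, best_err = None, np.inf
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for polarity in (1, -1):
                pred = np.where(X[:, j] <= thr, -polarity, polarity)
                err = np.sum(w[pred != y])
                if err < best_err:
                    best, best_err = (j, thr, polarity), err
    return best, best_err

def stump_predict(X, stump):
    j, thr, polarity = stump
    return np.where(X[:, j] <= thr, -polarity, polarity)

def adaboost_fit(X, y, n_rounds=10):
    w = np.full(len(y), 1.0 / len(y))        # start with uniform sample weights
    ensemble = []
    for _ in range(n_rounds):
        stump, err = fit_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)  # avoid division by zero / log(0)
        alpha = 0.5 * np.log((1 - err) / err) # vote weight of this stump
        pred = stump_predict(X, stump)
        w = w * np.exp(-alpha * y * pred)     # raise weights of misclassified samples
        w /= w.sum()                          # renormalise to a distribution
        ensemble.append((alpha, stump))
    return ensemble

def adaboost_predict(X, ensemble):
    score = sum(alpha * stump_predict(X, stump) for alpha, stump in ensemble)
    return np.where(score >= 0, 1, -1)

# Toy 1-D data that no single stump can separate (+ + - - + +).
X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]])
y = np.array([1, 1, -1, -1, 1, 1])

ensemble = adaboost_fit(X, y, n_rounds=3)
print("single stump :", adaboost_predict(X, ensemble[:1]))
print("three stumps :", adaboost_predict(X, ensemble))
```

On this toy data a single stump still misclassifies two points, while the weighted vote of three stumps fits all six. For the three-class Iris problem used below, scikit-learn applies a multi-class variant (SAMME) rather than this binary form.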

Step 1: Import Required Libraries

First, import the necessary Python libraries.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn.ensemble import AdaBoostClassifier, AdaBoostRegressor

from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor

from sklearn.metrics import accuracy_score, confusion_matrix, classification_report, mean_squared_error

Step 2: Load and Explore the Dataset

We will use the Iris dataset for classification.

from sklearn.datasets import load_iris

# Load dataset

iris = load_iris()

df = pd.DataFrame(iris.data, columns=iris.feature_names)

df['target'] = iris.target

# Display first 5 rows

print(df.head())

   sepal length (cm)  sepal width (cm)  petal length (cm)  petal width (cm)  target
0                5.1               3.5                1.4               0.2       0
1                4.9               3.0                1.4               0.2       0
2                4.7               3.2                1.3               0.2       0
3                4.6               3.1                1.5               0.2       0
4                5.0               3.6                1.4               0.2       0

Step 3: Split Data into Training and Testing Sets

We split the dataset into training (80%) and testing (20%) sets.

# Define features and target

X = df.drop(columns=['target'])

y = df['target']

# Split dataset

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
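The split above is not stratified, so the class counts in the test set can be uneven (the classification report in Step 6 shows supports of 10, 9 and 11). As an optional variation, and not what the rest of this walkthrough uses, passing stratify=y keeps the class proportions equal in both parts. The variable names with the _s suffix are chosen only for this sketch.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

X_s, y_s = load_iris(return_X_y=True)

# stratify=y_s preserves the 50/50/50 class balance of Iris in both splits
X_train_s, X_test_s, y_train_s, y_test_s = train_test_split(
    X_s, y_s, test_size=0.2, random_state=42, stratify=y_s
)

test_counts = np.bincount(y_test_s)
print("Test samples per class:", test_counts)
```

With a balanced dataset such as Iris the difference is small, but stratifying tends to matter more when classes are imbalanced.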

Step 4: Initialize and Train the AdaBoost Model

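We use AdaBoostClassifier with decision stumps (decision trees of depth 1) as weak learners, 50 boosting rounds, and a learning rate of 1.0. The estimator keyword requires scikit-learn 1.2 or later; earlier releases call it base_estimator.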

# Create an AdaBoost classifier with Decision Tree as the base estimator

adaboost_model = AdaBoostClassifier(estimator=DecisionTreeClassifier(max_depth=1), n_estimators=50, learning_rate=1.0, random_state=42)

# Train the model

adaboost_model.fit(X_train, y_train)
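After fitting, it can help to look inside the ensemble. The sketch below refits an equivalent model in a self-contained way (it relies on the default weak learner, which is already a depth-1 decision tree) and prints the weighted error and vote weight of the first few rounds via the estimators_, estimator_errors_ and estimator_weights_ attributes. The exact numbers depend on the scikit-learn version and boosting algorithm, so none are shown here.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Default weak learner: DecisionTreeClassifier(max_depth=1), i.e. a stump
model = AdaBoostClassifier(n_estimators=50, learning_rate=1.0, random_state=42)
model.fit(X_train, y_train)

# Boosting may stop early, so count the stumps that were actually fitted
n_fitted = len(model.estimators_)
print("Stumps fitted:", n_fitted)

for i in range(min(5, n_fitted)):
    print(
        f"round {i}: weighted error = {model.estimator_errors_[i]:.3f}, "
        f"vote weight = {model.estimator_weights_[i]:.3f}"
    )
```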

Step 5: Make Predictions

Now, we use the trained model to make predictions on the test dataset.

# Predict on test data

y_pred = adaboost_model.predict(X_test)
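Besides hard labels, the classifier can also return per-class scores through predict_proba. The following self-contained sketch (it refits a model with the default stump learner so it runs on its own) shows the shape of that output. These scores are derived from the ensemble's weighted votes and are best read as relative confidence rather than calibrated probabilities.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

model = AdaBoostClassifier(n_estimators=50, random_state=42).fit(X_train, y_train)

labels = model.predict(X_test)        # hard class labels, shape (30,)
proba = model.predict_proba(X_test)   # one column per class, shape (30, 3)

print("Classes:", model.classes_)
print("First three rows of class scores:\n", np.round(proba[:3], 3))
```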

Step 6: Evaluate Model Performance

We evaluate the model using accuracy score, confusion matrix, and classification report.

# Accuracy Score

accuracy = accuracy_score(y_test, y_pred)

print(f'Accuracy: {accuracy:.2f}')

# Confusion Matrix

conf_matrix = confusion_matrix(y_test, y_pred)

print("Confusion Matrix:\n", conf_matrix)

# Classification Report

print("Classification Report:\n", classification_report(y_test, y_pred))

Accuracy: 0.93

Confusion Matrix:
 [[10  0  0]
 [ 0  8  1]
 [ 0  1 10]]

Classification Report:
               precision    recall  f1-score   support

           0       1.00      1.00      1.00        10
           1       0.89      0.89      0.89         9
           2       0.91      0.91      0.91        11

    accuracy                           0.93        30
   macro avg       0.93      0.93      0.93        30
weighted avg       0.93      0.93      0.93        30

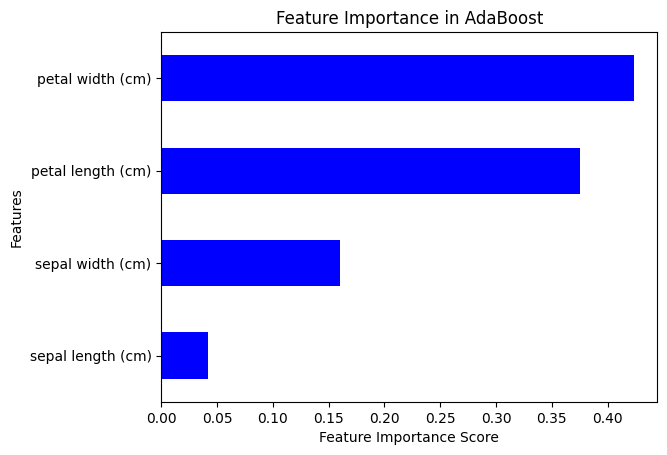
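A single 30-sample test set gives a fairly noisy accuracy estimate, since each misclassified flower moves the score by about 3 points. As a complementary check, the sketch below runs 5-fold stratified cross-validation on the full dataset. The fold count and seed are arbitrary choices for this example, and the resulting scores are not shown because they depend on the scikit-learn version.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score

X, y = load_iris(return_X_y=True)

# Shuffle before splitting: Iris is stored sorted by class
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
model = AdaBoostClassifier(n_estimators=50, random_state=42)

scores = cross_val_score(model, X, y, cv=cv, scoring="accuracy")
print("Fold accuracies:", scores.round(3))
print(f"Mean accuracy: {scores.mean():.3f} (std {scores.std():.3f})")
```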

Step 7: Feature Importance

A fitted AdaBoost model exposes feature_importances_, a weighted average of the impurity-based importances of its base estimators. It indicates which features the stumps rely on most.

# Plot feature importance

feature_importances = pd.Series(adaboost_model.feature_importances_, index=X.columns)

feature_importances.sort_values(ascending=True).plot(kind='barh', color='blue')

plt.xlabel('Feature Importance Score')

plt.ylabel('Features')

plt.title('Feature Importance in AdaBoost')

plt.show()
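Impurity-based importances are computed from the training data only, so it can be worth cross-checking them against permutation importance on the held-out set. The sketch below does that with sklearn.inspection.permutation_importance. It refits a model with the default stump learner so that it runs on its own, and n_repeats=10 is an arbitrary choice.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import AdaBoostClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42
)

model = AdaBoostClassifier(n_estimators=50, random_state=42).fit(X_train, y_train)

# Drop in test accuracy when each feature is shuffled, averaged over repeats
result = permutation_importance(
    model, X_test, y_test, n_repeats=10, random_state=42
)

for name, impurity, perm in zip(
    iris.feature_names, model.feature_importances_, result.importances_mean
):
    print(f"{name:<20} impurity-based = {impurity:.3f}   permutation = {perm:.3f}")
```

If the two rankings broadly agree, that is some reassurance that the bar chart reflects what the model actually uses on unseen data.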

Hyperparameter Tuning in AdaBoost

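We can tune AdaBoost with GridSearchCV, which evaluates every combination in a parameter grid using cross-validation. Here we search over n_estimators and learning_rate.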

from sklearn.model_selection import GridSearchCV

# Define parameter grid

param_grid = {

    'n_estimators': [50, 100, 150],

    'learning_rate': [0.01, 0.1, 1.0]

}

# Initialize GridSearchCV

# Use estimator= as in Step 4; base_estimator= was deprecated in scikit-learn 1.2 and removed in 1.4

grid_search = GridSearchCV(AdaBoostClassifier(estimator=DecisionTreeClassifier(max_depth=1), random_state=42),

                           param_grid, cv=5, n_jobs=-1)

grid_search.fit(X_train, y_train)

# Best parameters

print("Best Parameters:", grid_search.best_params_)

# Evaluate best model

best_adaboost = grid_search.best_estimator_

y_pred_best = best_adaboost.predict(X_test)

print("Best Model Accuracy:", accuracy_score(y_test, y_pred_best))
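The best parameters alone hide how close the other combinations were. A minimal sketch, assuming the same grid as above, loads cv_results_ into a DataFrame and ranks all nine combinations by mean cross-validated accuracy. It uses the default stump learner and omits n_jobs so that it is self-contained.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

param_grid = {
    "n_estimators": [50, 100, 150],
    "learning_rate": [0.01, 0.1, 1.0],
}

search = GridSearchCV(AdaBoostClassifier(random_state=42), param_grid, cv=5)
search.fit(X_train, y_train)

# One row per parameter combination, best rank first
results = pd.DataFrame(search.cv_results_)
cols = [
    "param_n_estimators",
    "param_learning_rate",
    "mean_test_score",
    "std_test_score",
    "rank_test_score",
]
print(results[cols].sort_values("rank_test_score").to_string(index=False))
```

When several combinations score within one standard deviation of each other, preferring the one with fewer estimators is a reasonable tie-breaker.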

Key Takeaways – AdaBoost in Python

Boosting Concept: AdaBoost improves weak learners (e.g., decision stumps) by adjusting misclassified sample weights iteratively.

Step-by-Step Process:

- Load & Explore Data – Use the Iris dataset for classification.

- Split Dataset – 80% training, 20% testing.

- Train AdaBoost Model – Uses Decision Tree (max depth = 1) as a base estimator.

- Make Predictions – Use trained model on test data.

- Evaluate Performance – Accuracy, confusion matrix, and classification report.

- Feature Importance – Identifies key contributing features.

- Hyperparameter Tuning – Uses GridSearchCV to optimize n_estimators and learning_rate.

Model Performance:

- Achieved 93% accuracy on test data (28 of 30 samples classified correctly).

- Identified feature importance to enhance model interpretability.

Hyperparameter Tuning:

- Searched n_estimators and learning_rate with 5-fold GridSearchCV, then re-evaluated the best combination on the test set.

Final Thought: AdaBoost is a powerful ensemble method that enhances weak classifiers to create a robust model for classification and regression tasks.

Next Blog: Step-wise Python Implementation of AdaBoost for Regression
