Step-wise Python Implementation of Gradient Boosting

Gradient Boosting is a powerful ensemble learning technique that builds models sequentially, correcting the errors of previous models. It is widely used for both classification and regression tasks.
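To make "correcting the errors of previous models" concrete, here is a minimal from-scratch sketch for regression with squared error, where each new tree is fitted to the residuals of the current ensemble. The toy data, the variable names, and the settings (`learning_rate`, `n_rounds`, `max_depth=2`) are illustrative assumptions, not what scikit-learn's `GradientBoostingClassifier` does internally for classification.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Synthetic 1-D regression data (illustrative assumption, not from the article)
rng = np.random.default_rng(0)
X_toy = rng.uniform(0, 6, size=(200, 1))
y_toy = np.sin(X_toy[:, 0]) + rng.normal(scale=0.1, size=200)

learning_rate = 0.1
n_rounds = 50

# Start from a constant prediction: the mean of the targets
prediction = np.full_like(y_toy, y_toy.mean())
mse_history = [np.mean((y_toy - prediction) ** 2)]
trees = []

for _ in range(n_rounds):
    # For squared error, the negative gradient is simply the residual
    residuals = y_toy - prediction
    tree = DecisionTreeRegressor(max_depth=2, random_state=0)
    tree.fit(X_toy, residuals)
    # Add a damped correction from the new weak learner
    prediction += learning_rate * tree.predict(X_toy)
    trees.append(tree)
    mse_history.append(np.mean((y_toy - prediction) ** 2))

print(f"Training MSE before boosting: {mse_history[0]:.4f}")
print(f"Training MSE after {n_rounds} rounds: {mse_history[-1]:.4f}")
```

Each round shrinks the training error a little; the learning rate controls how much of each tree's correction is applied.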

Step 1: Import Required Libraries

First, we need to import essential Python libraries.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn.ensemble import GradientBoostingClassifier, GradientBoostingRegressor

from sklearn.metrics import accuracy_score, confusion_matrix, classification_report, mean_squared_error

Step 2: Load and Explore the Dataset

We will use the Iris dataset for classification.

from sklearn.datasets import load_iris

# Load dataset

iris = load_iris()

df = pd.DataFrame(iris.data, columns=iris.feature_names)

df['target'] = iris.target

# Display first 5 rows

print(df.head())

   sepal length (cm)  sepal width (cm)  petal length (cm)  petal width (cm)  target
0                5.1               3.5                1.4               0.2       0
1                4.9               3.0                1.4               0.2       0
2                4.7               3.2                1.3               0.2       0
3                4.6               3.1                1.5               0.2       0
4                5.0               3.6                1.4               0.2       0

Step 3: Split Data into Training and Testing Sets

We split the dataset into training (80%) and testing (20%) sets.

# Define features and target

X = df.drop(columns=['target'])

y = df['target']

# Split dataset

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
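A plain random split does not guarantee equal class proportions in the test set; the classification report in Step 6 shows 10, 9, and 11 test samples per class. As an optional variation, the sketch below passes `stratify` to `train_test_split` so each class is represented equally. The names `X_iris`, `y_iris`, `X_tr`, and `X_te` are illustrative and kept separate from the variables used in the main walkthrough.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

X_iris, y_iris = load_iris(return_X_y=True)

# stratify=y_iris keeps the class proportions the same in both subsets
X_tr, X_te, y_tr, y_te = train_test_split(
    X_iris, y_iris, test_size=0.2, random_state=42, stratify=y_iris
)

print("Train class counts:", np.bincount(y_tr))
print("Test class counts: ", np.bincount(y_te))
```

Stratification matters more on imbalanced datasets, where a random split can leave a rare class almost absent from the test set.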

Step 4: Initialize and Train the Gradient Boosting Model

We use GradientBoostingClassifier for classification.

# Create Gradient Boosting Classifier

gb_model = GradientBoostingClassifier(n_estimators=100, learning_rate=0.1, random_state=42)

# Train the model

gb_model.fit(X_train, y_train)
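Because the ensemble is built one tree at a time, it can be informative to see how test accuracy changes as stages are added. The sketch below uses `staged_predict`, which yields predictions after each boosting stage. It refits the same model on the same split so that it runs on its own; the names `model` and `staged_accuracy` are illustrative.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

X_iris, y_iris = load_iris(return_X_y=True)
X_tr, X_te, y_tr, y_te = train_test_split(
    X_iris, y_iris, test_size=0.2, random_state=42
)

model = GradientBoostingClassifier(n_estimators=100, learning_rate=0.1, random_state=42)
model.fit(X_tr, y_tr)

# staged_predict yields predictions after 1, 2, ..., n_estimators stages
staged_accuracy = [
    accuracy_score(y_te, y_stage) for y_stage in model.staged_predict(X_te)
]

for n_stages in (1, 10, 50, 100):
    print(f"{n_stages:>3} stages: test accuracy = {staged_accuracy[n_stages - 1]:.2f}")
```

If accuracy plateaus early, a smaller `n_estimators` may be enough for this dataset.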

Step 5: Make Predictions

Now, we use the trained model to make predictions on test data.

# Predict on test data

y_pred = gb_model.predict(X_test)
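Besides hard class labels, the classifier can also report per-class probabilities through `predict_proba`, which helps judge how confident each prediction is. A minimal sketch, refitting the model so the block is self-contained (the names `model` and `proba` are illustrative):

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

X_iris, y_iris = load_iris(return_X_y=True)
X_tr, X_te, y_tr, y_te = train_test_split(
    X_iris, y_iris, test_size=0.2, random_state=42
)

model = GradientBoostingClassifier(n_estimators=100, learning_rate=0.1, random_state=42)
model.fit(X_tr, y_tr)

# One row per test sample, one column per class (ordered as in model.classes_)
proba = model.predict_proba(X_te)

print("Classes:", model.classes_)
print("Probabilities for the first 5 test samples:")
print(np.round(proba[:5], 3))
```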

Step 6: Evaluate Model Performance

We evaluate the model using accuracy score, confusion matrix, and classification report.

# Accuracy Score

accuracy = accuracy_score(y_test, y_pred)

print(f'Accuracy: {accuracy:.2f}')

# Confusion Matrix

conf_matrix = confusion_matrix(y_test, y_pred)

print("Confusion Matrix:\n", conf_matrix)

# Classification Report

print("Classification Report:\n", classification_report(y_test, y_pred))

Accuracy: 1.00
Confusion Matrix:
 [[10  0  0]
 [ 0  9  0]
 [ 0  0 11]]
Classification Report:
               precision    recall  f1-score   support

           0       1.00      1.00      1.00        10
           1       1.00      1.00      1.00         9
           2       1.00      1.00      1.00        11

    accuracy                           1.00        30
   macro avg       1.00      1.00      1.00        30
weighted avg       1.00      1.00      1.00        30

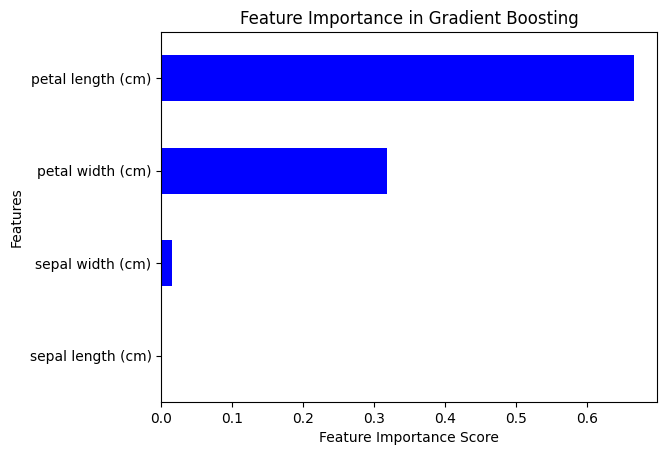
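A single 30-sample test set gives a fairly noisy accuracy estimate. As a complementary check, the sketch below runs 5-fold stratified cross-validation on the full dataset with `cross_val_score`. The fold count and the shuffle seed are assumptions chosen for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score

X_iris, y_iris = load_iris(return_X_y=True)

# Shuffle before splitting, keeping class proportions equal in every fold
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
model = GradientBoostingClassifier(n_estimators=100, learning_rate=0.1, random_state=42)

# Each fold trains a fresh copy of the model and scores it on the held-out part
scores = cross_val_score(model, X_iris, y_iris, cv=cv, scoring="accuracy")

print("Fold accuracies:", scores.round(3))
print(f"Mean accuracy: {scores.mean():.3f} (std {scores.std():.3f})")
```

The spread across folds gives a rough sense of how much the score depends on which samples end up in the test set.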

Step 7: Feature Importance

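A fitted Gradient Boosting model exposes impurity-based feature importances through its feature_importances_ attribute. The scores sum to 1 and indicate how much each feature contributed to the splits across all trees.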

# Plot feature importance

feature_importances = pd.Series(gb_model.feature_importances_, index=X.columns)

feature_importances.sort_values(ascending=True).plot(kind='barh', color='blue')

plt.xlabel('Feature Importance Score')

plt.ylabel('Features')

plt.title('Feature Importance in Gradient Boosting')

plt.show()
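Impurity-based importances are computed from the training data. A common complementary check is permutation importance, which measures how much the test score drops when one feature's values are shuffled. The sketch below uses `sklearn.inspection.permutation_importance`; `n_repeats=20` is an arbitrary choice for illustration.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

iris = load_iris()
X_tr, X_te, y_tr, y_te = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42
)

model = GradientBoostingClassifier(random_state=42).fit(X_tr, y_tr)

# Shuffle each feature n_repeats times and record the drop in test accuracy
result = permutation_importance(model, X_te, y_te, n_repeats=20, random_state=42)

perm_importances = pd.Series(result.importances_mean, index=iris.feature_names)
print(perm_importances.sort_values(ascending=False))
```

Features whose shuffling barely changes the score contribute little to the model's test-set predictions.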

Hyperparameter Tuning in Gradient Boosting

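We can fine-tune Gradient Boosting using GridSearchCV, which evaluates every combination in the parameter grid with cross-validation. The grid below has 3 × 3 × 3 = 27 combinations, so 5-fold cross-validation trains 135 models before refitting the best one.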

from sklearn.model_selection import GridSearchCV

# Define parameter grid

param_grid = {
    'n_estimators': [50, 100, 150],
    'learning_rate': [0.01, 0.1, 0.2],
    'max_depth': [3, 5, 7]
}

# Initialize GridSearchCV

grid_search = GridSearchCV(GradientBoostingClassifier(random_state=42), param_grid, cv=5, n_jobs=-1)

grid_search.fit(X_train, y_train)

# Best parameters

print("Best Parameters:", grid_search.best_params_)

# Evaluate best model

best_gb = grid_search.best_estimator_

y_pred_best = best_gb.predict(X_test)

print("Best Model Accuracy:", accuracy_score(y_test, y_pred_best))
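The best parameters alone do not show how close the other candidates were. As a sketch, the full cross-validation results can be loaded from `cv_results_` into a DataFrame and ranked. A deliberately small grid (`small_grid`, an assumption for this example) is used here so the block runs quickly on its own.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

X_iris, y_iris = load_iris(return_X_y=True)
X_tr, X_te, y_tr, y_te = train_test_split(
    X_iris, y_iris, test_size=0.2, random_state=42
)

# Reduced grid for illustration only: 2 x 2 = 4 candidates
small_grid = {"n_estimators": [50, 100], "learning_rate": [0.05, 0.1]}
search = GridSearchCV(GradientBoostingClassifier(random_state=42), small_grid, cv=5)
search.fit(X_tr, y_tr)

# cv_results_ holds one row per parameter combination
results = pd.DataFrame(search.cv_results_)
columns = [
    "param_n_estimators",
    "param_learning_rate",
    "mean_test_score",
    "std_test_score",
    "rank_test_score",
]
print(results[columns].sort_values("rank_test_score"))
print(f"Best cross-validated accuracy: {search.best_score_:.3f}")
```

When several candidates score within one standard deviation of each other, the simpler or faster configuration is often a reasonable pick.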

Key Takeaways

- Gradient Boosting builds models sequentially, correcting previous errors.

- Works well for classification (Iris dataset) and regression tasks.

- Uses weak learners (decision trees) and boosts their performance.

- Feature Importance helps identify key variables.

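- Hyperparameter Tuning with GridSearchCV can improve accuracy by searching over settings such as n_estimators, learning_rate, and max_depth.

- Achieved 100% accuracy on the Iris test set. With only 30 test samples, this score is a noisy estimate and does not guarantee the same performance on new data.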

Next Blog: Python Implementation of Gradient Boosting for Regression
