Decision Tree in Machine Learning

Decision trees are a widely used machine learning method for both classification and regression. Because they are simple to understand, interpret, and implement, they are a good starting point for newcomers to machine learning. This guide walks through the decision tree algorithm: how it works, how a tree is constructed, and the criteria used to choose splits and keep the tree from overfitting.

In this article, you will explore decision trees in the context of machine learning. The core idea is to classify data by breaking a complex decision into a sequence of simpler ones, each based on a single feature. For instance, a decision tree could predict whether an individual is likely to buy a car based on their age and income. Analytics Vidhya's articles on decision tree analytics provide further in-depth explanations. Working through these examples and the decision tree algorithm will sharpen your analytical skills.

Along the way, you will learn the basics of the decision tree framework, see several examples of decision trees, understand how the algorithm chooses its splits, and work through a practical example step by step.

Essential Elements of a Decision Tree:

- Root Node: The topmost node, which represents the complete dataset and is the starting point for splitting.

- Internal Nodes: Nodes that test a feature (attribute) of the data; each one sends a sample down one branch or another depending on a condition.

- Branches: Pathways that link nodes and illustrate the results of decisions or conditions.

- Leaf Nodes: The terminal nodes, which hold the final prediction (a class label for classification or a numeric value for regression).

- Splitting: The act of partitioning a node into two or more subnodes based on a chosen decision criterion. This includes picking a feature and a threshold to form data subsets.

- Parent Node: A node that is split into two or more child nodes.

- Child Node: Nodes that emerge as a consequence of a split from a parent node.

- Decision Criterion: The rule or condition used to decide how the data are split at a decision node, typically a comparison of a feature value against a threshold.

- Pruning: The technique of removing branches or nodes from a decision tree to improve its generalization and prevent overfitting.
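To connect these terms, here is a minimal Python sketch of a tree node. The `Node` class, its field names, and the age/income thresholds in the example tree are hypothetical, loosely based on the car-purchase example from the introduction. The helper walks every root-to-leaf path, and each path is one decision rule.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """One node of a binary decision tree (hypothetical structure for illustration)."""
    feature: Optional[str] = None      # feature tested here (None for a leaf)
    threshold: Optional[float] = None  # samples with value <= threshold go left
    left: Optional["Node"] = None      # child for "value <= threshold"
    right: Optional["Node"] = None     # child for "value > threshold"
    prediction: Optional[str] = None   # class label stored at a leaf

    def is_leaf(self) -> bool:
        return self.prediction is not None


def decision_rules(node: Node, path: tuple = ()):
    """Yield (conditions, prediction) for every root-to-leaf path."""
    if node.is_leaf():
        yield list(path), node.prediction
        return
    yield from decision_rules(node.left, path + (f"{node.feature} <= {node.threshold}",))
    yield from decision_rules(node.right, path + (f"{node.feature} > {node.threshold}",))


# Hypothetical car-purchase tree: the root tests age, an internal node tests income,
# and the three leaves hold the predictions. Thresholds are made up.
root = Node(
    feature="age", threshold=30,
    left=Node(prediction="no"),
    right=Node(
        feature="income", threshold=50_000,
        left=Node(prediction="no"),
        right=Node(prediction="yes"),
    ),
)

for conditions, label in decision_rules(root):
    print(" AND ".join(conditions), "->", label)
# age <= 30 -> no
# age > 30 AND income <= 50000 -> no
# age > 30 AND income > 50000 -> yes
```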

Benefits:

- Straightforward to Grasp: Understandable, even for those without a technical background.

- Complex Interactions: Capable of modeling intricate relationships between variables.

- Variable Selection: Automatically identifies the most significant variables during the partitioning process.

Disadvantages:

- Overfitting: Deep trees can overfit the training data.

- Unstable: Small changes in data can lead to a completely different tree.

- Bias in Split Selection: Criteria such as Information Gain tend to favor features with many distinct values (for example, high-cardinality categorical features).

How Is a Decision Tree Formed?

A Decision Tree is formed through the following steps:

- Root Node Creation: Start with the entire dataset and select the best feature to split based on a criterion (e.g., Information Gain, Gini Index, etc.).

- Splitting: Divide the dataset into subsets based on the chosen feature's values.

- Recursive Partitioning: Repeat the splitting process for each subset, creating child nodes, until a stopping condition is met:

  - All data points in the node belong to one class.

  - The maximum depth of the tree is reached.

  - A further split would leave fewer than the minimum number of samples required in a leaf.

- Leaf Nodes: Assign outcomes (class labels or values) to the terminal nodes.

- Pruning (Optional): Simplify the tree by removing less significant branches to prevent overfitting.
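The formation steps above map onto a short recursive function. The sketch below assumes categorical features stored as dicts and uses entropy-based Information Gain, which makes it an ID3-style tree with one branch per feature value. The names `build_tree`, `max_depth`, and `min_samples` are our own, not a library API, and pruning is not shown.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def build_tree(rows, labels, features, depth=0, max_depth=3, min_samples=2):
    """Grow an ID3-style tree on categorical features (rows are dicts).

    Returns a class label for a leaf, or a dict
    {"feature": name, "children": {value: subtree}, "default": majority label}.
    """
    majority = Counter(labels).most_common(1)[0][0]
    # Stopping conditions: pure node, depth limit, too few samples to split, no features left.
    if len(set(labels)) == 1 or depth >= max_depth or len(labels) < min_samples or not features:
        return majority  # leaf node: assign the majority class

    def info_gain(feature):
        groups = {}
        for row, label in zip(rows, labels):
            groups.setdefault(row[feature], []).append(label)
        weighted = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
        return entropy(labels) - weighted

    best = max(features, key=info_gain)  # split on the highest Information Gain
    remaining = [f for f in features if f != best]
    children = {}
    for value in sorted({row[best] for row in rows}):
        idx = [i for i, row in enumerate(rows) if row[best] == value]
        children[value] = build_tree([rows[i] for i in idx], [labels[i] for i in idx],
                                     remaining, depth + 1, max_depth, min_samples)
    return {"feature": best, "children": children, "default": majority}


# The four weather rows from the Play Tennis example later in this article.
rows = [
    {"Outlook": "Sunny", "Temperature": "Hot", "Humidity": "High", "Windy": False},
    {"Outlook": "Overcast", "Temperature": "Hot", "Humidity": "High", "Windy": False},
    {"Outlook": "Rainy", "Temperature": "Mild", "Humidity": "High", "Windy": False},
    {"Outlook": "Rainy", "Temperature": "Cool", "Humidity": "Normal", "Windy": False},
]
labels = ["NO", "YES", "YES", "YES"]
tree = build_tree(rows, labels, ["Outlook", "Temperature", "Humidity", "Windy"])
print(tree)
# {'feature': 'Outlook', 'children': {'Overcast': 'YES', 'Rainy': 'YES', 'Sunny': 'NO'}, 'default': 'YES'}
```

Removing a feature once it has been used mirrors ID3's handling of categorical features; trees that split numeric features on thresholds (such as CART) can reuse a feature at different thresholds.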

Why Use Decision Trees?

- Easy to Interpret: The tree-like structure is simple to understand and visualize.

- Handles Both Tasks: Works well for both classification and regression problems.

- No Feature Scaling Required: Unlike algorithms like SVM or KNN, decision trees don’t need data normalization or standardization.

- Captures Nonlinear Relationships: Can model complex relationships between features and target variables.

- Automatic Feature Selection: Identifies the most important features during splitting.

- Versatile: Can handle both numerical and categorical data effectively.

Entropy

Entropy is a measure of impurity or disorder in a dataset. It quantifies the uncertainty in predicting the class label of a randomly chosen data point. A dataset with all samples belonging to the same class has zero entropy (completely pure), while a dataset with an equal mix of classes has maximum entropy (completely impure).

How Do Decision Trees Use Entropy?

Entropy measures the impurity or randomness in the data. It helps to decide which feature to split on. Lower entropy means the dataset is more pure.

Steps:

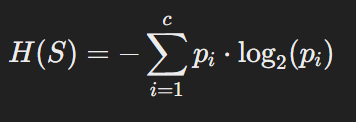

Entropy Formula:

For a dataset S with c classes, entropy H is given by:

$$H(S) = -\sum_{i=1}^{c} p_i \log_2 p_i$$

where $p_i$ is the proportion of data points in S belonging to class i.

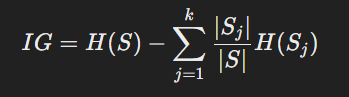

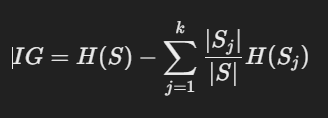
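The entropy formula translates directly into a few lines of Python. This is a minimal sketch; the function name `entropy` is our own, and the class labels are assumed to be given as a plain list.

```python
import math
from collections import Counter


def entropy(labels):
    """H(S) = -sum_i p_i * log2(p_i), where p_i is the share of class i in labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


print(entropy(["A", "A", "A", "A"]))  # pure node: 0.0
print(entropy(["A", "A", "B", "B"]))  # 50/50 mix of two classes: 1.0
print(entropy(["A", "B", "C", "D"]))  # four equally likely classes: 2.0
```

With two classes the maximum is 1 bit; with c equally likely classes it is log2(c) bits.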

Information Gain (IG):

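Information Gain is the reduction in entropy achieved by splitting on a feature A:

$$IG(S, A) = H(S) - \sum_{j} \frac{|S_j|}{|S|} H(S_j)$$

- S: Original dataset.

- $S_j$: Subsets produced by the split (one per value of A).

- $|S_j| / |S|$: Weight of each subset, i.e. its share of the samples.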
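The same formula as a minimal Python sketch, assuming the split has already produced its subsets as lists of labels; `information_gain` is a name chosen for illustration.

```python
import math
from collections import Counter


def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent, subsets):
    """IG(S, A) = H(S) - sum_j |S_j| / |S| * H(S_j)."""
    n = len(parent)
    weighted = sum(len(s) / n * entropy(s) for s in subsets)  # weighted child entropy
    return entropy(parent) - weighted


parent = ["A", "A", "B", "B"]
print(information_gain(parent, [["A", "A"], ["B", "B"]]))  # perfect split: 1.0
print(information_gain(parent, [["A", "B"], ["A", "B"]]))  # useless split: 0.0
```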

Feature Selection:

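- Calculate the entropy of the dataset.

- For each feature, split the data and compute the weighted entropy of the resulting subsets; subtracting it from the dataset's entropy gives that feature's Information Gain.

- The feature with the highest Information Gain is chosen for the split.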

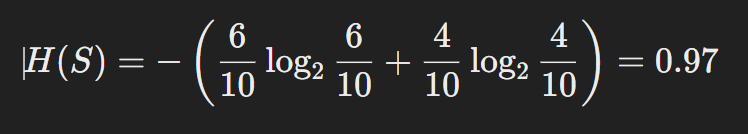

Example:

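If a dataset has 10 samples, where 6 belong to class A and 4 to class B, its entropy is:

$$H(S) = -\left(0.6 \log_2 0.6 + 0.4 \log_2 0.4\right) \approx 0.971$$

After splitting on a feature, calculate the weighted entropy of the subsets and subtract it from H(S) to get the Information Gain; the feature with the largest gain gives the best split.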
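To make the arithmetic concrete, here is a minimal Python sketch for the 6 A / 4 B dataset. The split itself (4 A + 1 B on one side, 2 A + 3 B on the other) is hypothetical, chosen only to illustrate the calculation.

```python
import math


def entropy_from_counts(*counts):
    """Entropy (base 2) from raw class counts, e.g. entropy_from_counts(6, 4)."""
    n = sum(counts)
    return sum(-(c / n) * math.log2(c / n) for c in counts if c > 0)


parent = entropy_from_counts(6, 4)  # 6 samples of class A, 4 of class B

# Hypothetical split into two groups of 5: (4 A, 1 B) and (2 A, 3 B).
left, right = entropy_from_counts(4, 1), entropy_from_counts(2, 3)
weighted = 5 / 10 * left + 5 / 10 * right
gain = parent - weighted

print(f"H(S) = {parent:.3f}, weighted child entropy = {weighted:.3f}, IG = {gain:.3f}")
# H(S) = 0.971, weighted child entropy = 0.846, IG = 0.125
```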

How Does the Decision Tree Algorithm Work?

The Decision Tree Algorithm works by repeatedly splitting the dataset into subsets based on the most significant feature at each step. The goal is to create a tree structure where each path represents a decision rule, and the leaf nodes provide predictions. Here's a step-by-step explanation:

Step 1: Start with the Root Node

- The root node represents the entire dataset.

- The algorithm evaluates all features to determine which one provides the best split (based on criteria like Information Gain or Gini Index).

Step 2: Calculate the Best Split

- For each feature, the algorithm checks all possible thresholds (for numerical features) or categories (for categorical features).

- It evaluates how well each split separates the data into pure subsets.
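For a numerical feature, "all possible thresholds" is commonly implemented as the midpoints between consecutive distinct sorted values. The sketch below scores each candidate with the weighted Gini impurity (described under the splitting criteria that follow); the ages and purchase labels are hypothetical, loosely following the car-buying example from the introduction.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum_i p_i^2."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_threshold(values, labels):
    """Try every midpoint between consecutive distinct values.

    Returns (threshold, weighted Gini impurity) of the best candidate.
    """
    distinct = sorted(set(values))
    best = (None, float("inf"))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(values, labels) if x <= t]
        right = [y for x, y in zip(values, labels) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(values)
        if score < best[1]:  # keep the purest split seen so far
            best = (t, score)
    return best


# Hypothetical data: customer age and whether they bought a car.
ages = [22, 25, 31, 35, 40, 48]
bought = ["no", "no", "no", "yes", "yes", "yes"]
print(best_threshold(ages, bought))  # (33.0, 0.0): "age <= 33" separates the classes perfectly
```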

Criteria for Splitting:

- Information Gain (IG):

- Information Gain (IG) is a key concept used in decision trees, specifically for classification tasks. It measures the reduction in uncertainty or entropy achieved by partitioning the data based on a particular feature. In other words, Information Gain tells us how much information a feature provides about the target variable.

- Measures the reduction in entropy after a split.

- Higher IG means a better split.

Formula:

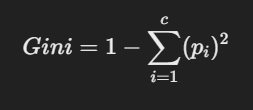

- Gini Index:

- The Gini Index, also known as the Gini Impurity or Gini Coefficient, is a measure of how often a randomly chosen element from a set would be incorrectly classified if it were randomly labeled according to the distribution of labels in the set. It is widely used in decision trees to determine how to split data at each node.

- Measures impurity in a subset.

- Lower Gini Index indicates better splits.

Formula:
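The Gini impurity, one minus the sum of squared class proportions, is just as short to compute. This minimal sketch reuses the hypothetical 6 A / 4 B dataset and the same hypothetical split (4 A + 1 B versus 2 A + 3 B) as the entropy example; the function names are our own.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum_i p_i^2 (0 means a pure node)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def weighted_gini(subsets):
    """Size-weighted Gini impurity of the child nodes produced by a split."""
    total = sum(len(s) for s in subsets)
    return sum(len(s) / total * gini(s) for s in subsets)


node = ["A"] * 6 + ["B"] * 4
split = [["A"] * 4 + ["B"], ["A"] * 2 + ["B"] * 3]
print(f"{gini(node):.2f}")            # 0.48 before the split
print(f"{weighted_gini(split):.2f}")  # 0.40 after the split
```

The drop from 0.48 to 0.40 plays the same role as Information Gain: the larger the drop, the better the split.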

Step 3: Split the Data

- The feature with the highest Information Gain (or the lowest weighted Gini impurity) is chosen for the split.

- The dataset is divided into subsets based on this feature.

Step 4: Repeat the Process (Recursion)

- For each subset created, repeat Steps 2 and 3.

- Each subset becomes a child node, and the algorithm keeps splitting until a stopping condition is met:

  - Maximum tree depth is reached.

  - A further split would leave fewer than the minimum number of samples required in a leaf.

  - The subset is pure (all samples belong to one class).

- Nodes that are no longer split become the tree's leaf nodes.

Step 5: Generate Predictions

- For a new data instance, the algorithm follows the tree from the root, taking at each node the branch that matches the instance's feature value.

- The resulting prediction corresponds to the class label or regression value found at the relevant leaf node.
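Prediction is a simple walk from the root to a leaf. The minimal sketch below stores a tree as nested dicts (an assumed representation, not a library format) and uses a hand-written, hypothetical tree: the Humidity split under Sunny is invented for illustration. Unseen feature values fall back to the node's majority label.

```python
def predict(tree, sample):
    """Follow the branch matching the sample's feature value until a leaf (a plain label)."""
    while isinstance(tree, dict):
        value = sample.get(tree["feature"])
        if value not in tree["children"]:
            return tree["default"]  # unseen category: use this node's majority label
        tree = tree["children"][value]
    return tree


# Hypothetical two-level tree: root tests Outlook, one internal node tests Humidity.
tree = {
    "feature": "Outlook",
    "children": {
        "Sunny": {"feature": "Humidity", "children": {"High": "NO", "Normal": "YES"}, "default": "NO"},
        "Overcast": "YES",
        "Rainy": "YES",
    },
    "default": "YES",
}

print(predict(tree, {"Outlook": "Sunny", "Humidity": "High"}))    # NO
print(predict(tree, {"Outlook": "Sunny", "Humidity": "Normal"}))  # YES
print(predict(tree, {"Outlook": "Foggy"}))                        # YES (fallback)
```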

Example:

Suppose we have a dataset of weather conditions for playing tennis:

| Outlook | Temperature | Humidity | Windy | Play Tennis |
| --- | --- | --- | --- | --- |
| Sunny | Hot | High | False | NO |
| Overcast | Hot | High | False | YES |
| Rainy | Mild | High | False | YES |
| Rainy | Cool | Normal | False | YES |

Step-by-Step Process:

- Root Node: Evaluate all features (e.g., Outlook, Humidity) to find the best split.

  - Outlook has the highest Information Gain, so the root splits on Outlook.

- Create Subsets:

  - Subset 1: Outlook = Sunny.

  - Subset 2: Outlook = Overcast.

  - Subset 3: Outlook = Rainy.

- Repeat Splitting:

  - For each subset, find the next best feature to split on.

- Stop:

  - Stop splitting when a subset contains only one class or meets another stopping condition. In this four-row table every Outlook subset is already pure (Sunny → NO; Overcast and Rainy → YES), so the tree stops after a single split.
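The claim that Outlook gives the highest Information Gain can be checked on the four rows above. This minimal sketch hard-codes the table; the helper names are our own.

```python
import math
from collections import Counter


def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


columns = ["Outlook", "Temperature", "Humidity", "Windy"]
data = [
    ("Sunny", "Hot", "High", False, "NO"),
    ("Overcast", "Hot", "High", False, "YES"),
    ("Rainy", "Mild", "High", False, "YES"),
    ("Rainy", "Cool", "Normal", False, "YES"),
]
labels = [row[-1] for row in data]  # the Play Tennis column


def information_gain(col):
    """Entropy of the labels minus the weighted entropy after grouping by column `col`."""
    groups = {}
    for row in data:
        groups.setdefault(row[col], []).append(row[-1])
    weighted = sum(len(g) / len(data) * entropy(g) for g in groups.values())
    return entropy(labels) - weighted


gains = {name: information_gain(i) for i, name in enumerate(columns)}
for name, gain in gains.items():
    print(f"{name:12s} IG = {gain:.3f}")
print("Best split:", max(gains, key=gains.get))
# Outlook      IG = 0.811
# Temperature  IG = 0.311
# Humidity     IG = 0.123
# Windy        IG = 0.000
# Best split: Outlook
```

Outlook's gain equals the full entropy of the labels (0.811) because each of its subsets is pure; Windy gains nothing because it takes only one value in this table.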

Key Takeaways:

- Decision Trees Overview:

  - Decision Trees are simple yet powerful machine learning algorithms used for both classification and regression tasks.

  - They are easy to understand, interpret, and implement, making them ideal for beginners.

- Basic Structure:

  - A Decision Tree consists of a Root Node (entire dataset), Internal Nodes (decisions based on features), Branches (decision paths), and Leaf Nodes (final predictions).

- Important Components:

  - Root Node: The starting point, representing the whole dataset.

  - Internal Nodes: Nodes that test attributes or features of the data.

  - Leaf Nodes: Endpoints representing the final decision or classification.

  - Splitting: The process of dividing the data into subsets based on decision criteria.

  - Pruning: The process of removing branches to avoid overfitting and improve generalization.

- Key Criteria:

  - Entropy: Measures the impurity or disorder in the dataset. A dataset whose samples all belong to the same class has zero entropy.

  - Information Gain (IG): Measures the reduction in entropy after a split. The feature with the highest Information Gain is chosen for splitting.

  - Gini Index: Measures the impurity of a set of samples. The split with the lowest weighted Gini Index is chosen.

- Benefits:

  - Easy to Understand and Visualize: Can be visualized as a flowchart.

  - Handles Both Classification and Regression: Applicable to both tasks.

  - No Feature Scaling Needed: Unlike some other models, Decision Trees do not require data normalization or standardization.

  - Automatic Feature Selection: The algorithm automatically selects important features.

- Disadvantages:

  - Overfitting: Decision Trees can easily overfit if they grow too deep.

  - Instability: Small changes in data can lead to a completely different tree.

  - Bias in Split Selection: Information Gain tends to favor features with many distinct values.

- Algorithm Steps:

  - Start with the root node and evaluate all features to find the best one to split on.

  - Split the data into subsets based on the best feature.

  - Recursively repeat the process for each subset until stopping criteria are met (pure subsets, maximum tree depth, etc.).

  - Use the finished tree for predictions: each leaf node provides the final prediction.

- Practical Example:

  - The decision tree for predicting whether to play tennis from weather conditions (Outlook, Temperature, Humidity, Windy) splits the data on features such as Outlook (Sunny, Overcast, Rainy) and continues until the subsets are pure (all instances of one class).

Next blog: Python Implementation of Decision Trees Using Entropy
