Python Implementation of Polynomial Regression Model

Step 1: Import the Necessary Libraries

First, we import the required libraries.

# Suppress warnings

import warnings

warnings.filterwarnings('ignore')

# Import numpy and pandas for data handling

import numpy as np

import pandas as pd

# Data visualization

import matplotlib.pyplot as plt

import seaborn as sns

# Scikit-learn libraries for preprocessing and modeling

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import PolynomialFeatures, StandardScaler

from sklearn.linear_model import LinearRegression

from sklearn.metrics import r2_score, mean_absolute_error

Step 2: Generate or Load the Dataset

You can load an existing dataset or generate one. For simplicity, we simulate a dataset here: 300 evenly spaced X values between -5 and 5, with y following a cubic equation plus Gaussian noise.

# Generating a dataset

np.random.seed(0)

X = np.linspace(-5, 5, 300).reshape(-1, 1) # Generating 300 points between -5 and 5

y = X**3 + 2*X**2 - 3*X + np.random.normal(0, 3, size=X.shape) # Cubic equation with noise

# Convert the data to a DataFrame

data = pd.DataFrame({'X': X.flatten(), 'y': y.flatten()})

data.head()

Output:

          X          y
0 -5.000000 -54.707843
1 -4.966555 -57.074902
2 -4.933110 -53.643391
3 -4.899666 -48.189790
4 -4.866221 -47.671070

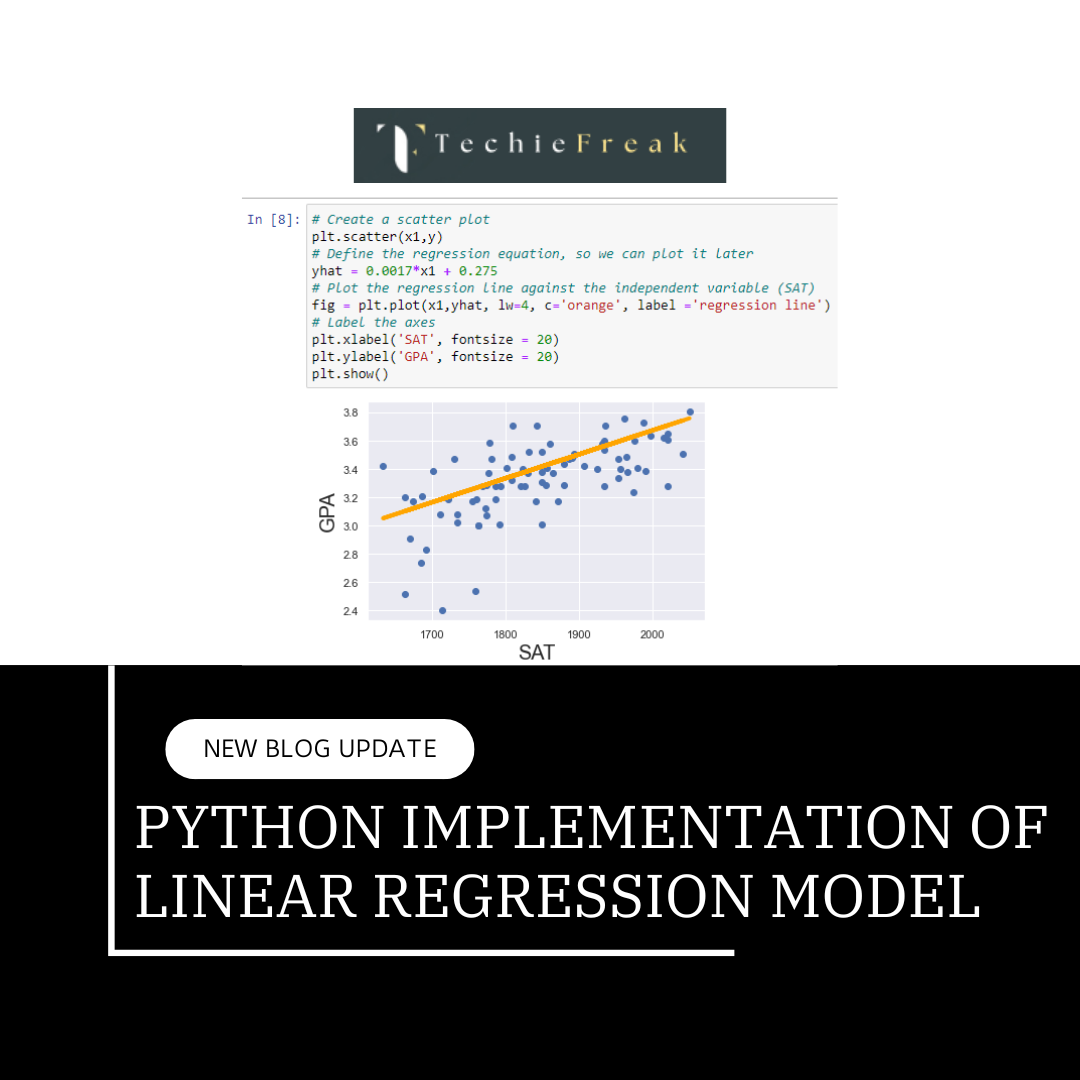

Step 3: Visualize the Data

Visualize the relationship between X and y with a scatter plot.

plt.scatter(data['X'], data['y'], color='blue', label='Data')

plt.title("Scatter Plot of X vs y")

plt.xlabel("X")

plt.ylabel("y")

plt.legend()

plt.show()

Output:

A scatter plot showing the relationship between X and y.

Step 4: Train-Test Split

Split the data into training (80%) and testing (20%) sets.

X_train, X_test, y_train, y_test = train_test_split(data[['X']], data['y'], test_size=0.2, random_state=42)

print("Training set size:", X_train.shape[0])

print("Testing set size:", X_test.shape[0])

Output:

Training set size: 240

Testing set size: 60

Step 5: Apply Polynomial Features

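Use PolynomialFeatures to expand the single feature X into the polynomial terms X, X^2, and X^3. The transformer is fit on the training data and then applied unchanged to the test data.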

# Create polynomial features of degree 3

poly = PolynomialFeatures(degree=3, include_bias=False)

# Transform training and testing data

X_train_poly = poly.fit_transform(X_train)

X_test_poly = poly.transform(X_test)

# Display the transformed features for training data

pd.DataFrame(X_train_poly, columns=["X", "X^2", "X^3"]).head()

Output:

          X        X^2         X^3
0  2.759197   7.613170   21.006238
1 -3.026756   9.161251  -27.728870
2 -4.799331  23.033579 -110.545772
3  1.187291   1.409660    1.673676
4  0.785953   0.617722    0.485501

Step 6: Scale the Features

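Standardizing gives each polynomial feature zero mean and unit variance, so X, X^2, and X^3 end up on comparable scales even though their raw ranges differ widely. The scaler is fit on the training data only and then applied to the test data.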

# Initialize the scaler

scaler = StandardScaler()

# Scale polynomial features

X_train_poly_scaled = scaler.fit_transform(X_train_poly)

X_test_poly_scaled = scaler.transform(X_test_poly)

print("First 5 rows of scaled training data:")

print(X_train_poly_scaled[:5])

Output:

The scaled values of the polynomial features.

First 5 rows of scaled training data:
[[ 0.95793709 -0.09611154  0.44302559]
 [-1.04566716  0.10880007 -0.57928867]
 [-1.65948811  1.94500941 -2.31653526]
 [ 0.4136053  -0.91723854  0.03748728]
 [ 0.27462697 -1.02206326  0.01256298]]

Step 7: Fit the Polynomial Regression Model

Train a linear regression model on the polynomial features.

# Train the model

lr_model = LinearRegression()

lr_model.fit(X_train_poly_scaled, y_train)

# Print the coefficients

print("Model Coefficients:", lr_model.coef_)

print("Intercept:", lr_model.intercept_)

Output:

Model Coefficients: [-9.09352288 15.36580183 47.73588139]

Intercept: 16.747637303669734
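These coefficients refer to the standardized features, which is why they do not look like the -3, 2, and 1 used to generate the data. A minimal sketch of converting them back to the original units follows, assuming the scaler's `mean_` and `scale_` attributes are used to undo the standardization. To stay self-contained it regenerates the data and fits on all 300 points, so its numbers will differ slightly from the output above; the names `coef_original` and `intercept_original` are illustrative.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Same synthetic cubic as in Step 2 (no train/test split, to keep this short)
np.random.seed(0)
X = np.linspace(-5, 5, 300).reshape(-1, 1)
y = (X**3 + 2 * X**2 - 3 * X + np.random.normal(0, 3, size=X.shape)).ravel()

poly = PolynomialFeatures(degree=3, include_bias=False)
scaler = StandardScaler()
X_poly = poly.fit_transform(X)
X_poly_scaled = scaler.fit_transform(X_poly)
model = LinearRegression().fit(X_poly_scaled, y)

# Each scaled column is (feature - mean_) / scale_, so dividing a coefficient
# by scale_ expresses it per unit of the original feature
coef_original = model.coef_ / scaler.scale_
intercept_original = model.intercept_ - np.sum(coef_original * scaler.mean_)

print("Coefficients for X, X^2, X^3:", coef_original)
print("Intercept:", intercept_original)
```

With the objects from this tutorial, the same conversion would be `lr_model.coef_ / scaler.scale_`. The recovered values should land close to the generating equation's -3, 2, and 1, up to noise.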

Step 8: Evaluate the Model

Predict on the test set and evaluate the model using R² and Mean Absolute Error (MAE).

# Make predictions

y_pred = lr_model.predict(X_test_poly_scaled)

# Evaluate the model

r2 = r2_score(y_test, y_pred)

mae = mean_absolute_error(y_test, y_pred)

print("R² Score:", r2)

print("Mean Absolute Error:", mae)

Output:

R² Score: 0.9936906340058268

Mean Absolute Error: 2.9373465980999725
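For reference, both metrics can be computed by hand: R² is one minus the ratio of the residual sum of squares to the total sum of squares, and MAE is the mean of the absolute residuals. The sketch below checks this against scikit-learn on four toy values that are invented for illustration and unrelated to the tutorial's data.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, r2_score

# Toy values, invented for illustration only
y_true = np.array([3.0, -0.5, 2.0, 7.0])
y_hat = np.array([2.5, 0.0, 2.0, 8.0])

# R² = 1 - SS_res / SS_tot
ss_res = np.sum((y_true - y_hat) ** 2)          # squared prediction errors
ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # squared deviations from the mean
r2_manual = 1 - ss_res / ss_tot

# MAE = mean of |y_true - y_hat|
mae_manual = np.mean(np.abs(y_true - y_hat))

print("R² (manual vs sklearn):", r2_manual, r2_score(y_true, y_hat))
print("MAE (manual vs sklearn):", mae_manual, mean_absolute_error(y_true, y_hat))
```

Note that MAE is in the units of y, so an MAE near 3 in this tutorial is about the size of the noise standard deviation (3) used to generate the data.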

Step 9: Visualize Predictions

Plot the true test values alongside the model's predictions for the same X values.

# Visualizing the predictions

plt.scatter(X_test, y_test, color='blue', label='True Values')

plt.scatter(X_test, y_pred, color='red', label='Predicted Values')

plt.title("True vs Predicted Values")

plt.xlabel("X")

plt.ylabel("y")

plt.legend()

plt.show()

Output:

A scatter plot comparing the true test values (blue) with the model's predictions (red).

Step 10: Visualize the Polynomial Curve

Generate predictions over the entire range of X to visualize the polynomial curve.

# Generate predictions for the entire range of X

X_range = np.linspace(X.min(), X.max(), 500).reshape(-1, 1)

X_range_poly = scaler.transform(poly.transform(X_range))

y_range_pred = lr_model.predict(X_range_poly)

# Plot the polynomial curve

plt.scatter(data['X'], data['y'], color='blue', label='Data')

plt.plot(X_range, y_range_pred, color='red', label='Polynomial Fit')

plt.title("Polynomial Regression Curve")

plt.xlabel("X")

plt.ylabel("y")

plt.legend()

plt.show()
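Notice that new inputs such as X_range have to pass through the same `poly` and `scaler` objects, in the same order, before prediction. One alternative, not used in the steps above, is to chain the three stages with scikit-learn's `make_pipeline` so that a single `fit` and `predict` handle the transformations. The sketch below assumes the same synthetic data and split settings as this tutorial.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Same synthetic cubic as in Step 2
np.random.seed(0)
X = np.linspace(-5, 5, 300).reshape(-1, 1)
y = (X**3 + 2 * X**2 - 3 * X + np.random.normal(0, 3, size=X.shape)).ravel()

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Polynomial expansion -> scaling -> linear regression, as one estimator
pipeline_model = make_pipeline(
    PolynomialFeatures(degree=3, include_bias=False),
    StandardScaler(),
    LinearRegression(),
)
pipeline_model.fit(X_train, y_train)

r2_pipeline = r2_score(y_test, pipeline_model.predict(X_test))
print("R² Score:", r2_pipeline)

# Raw X values go straight in; the pipeline applies both transforms itself
X_range = np.linspace(X.min(), X.max(), 500).reshape(-1, 1)
y_range_pred = pipeline_model.predict(X_range)
```

The pipeline fits the transformers on the training data only, which mirrors the manual `fit_transform` / `transform` pattern in Steps 5 and 6.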

Summary of Outputs:

- Scatter Plot of X vs y: Visualizes raw data distribution.

- Training and Testing Split Sizes: Confirms the split ratio.

- Transformed Polynomial Features: Displays created polynomial terms.

- Scaled Features: Shows scaled polynomial features.

- Model Coefficients and Intercept: Highlights learned weights.

- R² and MAE Scores: Evaluates model accuracy and error.

- Prediction Visualization: Compares true and predicted values.

- Polynomial Curve: Illustrates the fit of the polynomial model.

This completes the implementation of Polynomial Regression in Python!
