Python Implementation of Ridge Regression

Step 1: Import the Necessary Libraries

First, we import the required libraries.

# Suppress warnings

import warnings

warnings.filterwarnings('ignore')

# Import numpy and pandas for data handling

import numpy as np

import pandas as pd

# Data visualization

import matplotlib.pyplot as plt

import seaborn as sns

# Scikit-learn libraries for preprocessing and modeling

from sklearn.model_selection import train_test_split

from sklearn.linear_model import Ridge

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

Step 2: Generate or Load the Dataset

We generate a synthetic dataset with a known linear relationship, y = 2X + 3, and add Gaussian noise with a standard deviation of 2. Knowing the true slope and intercept makes it easy to judge the fitted model later.

# Generating a dataset

np.random.seed(0)

X = np.random.rand(300, 1) * 10  # 300 points drawn uniformly from [0, 10)

y = 2 * X + 3 + np.random.randn(300, 1) * 2  # y = 2X + 3 plus Gaussian noise (std 2)

# Convert the data to a DataFrame for easier handling

data = pd.DataFrame({'X': X.flatten(), 'y': y.flatten()})

data.head()

Output:

X y

0 5.488135 14.289283

1 7.151894 17.768149

2 6.027634 13.860635

3 5.448832 13.421820

4 4.236548 8.624974

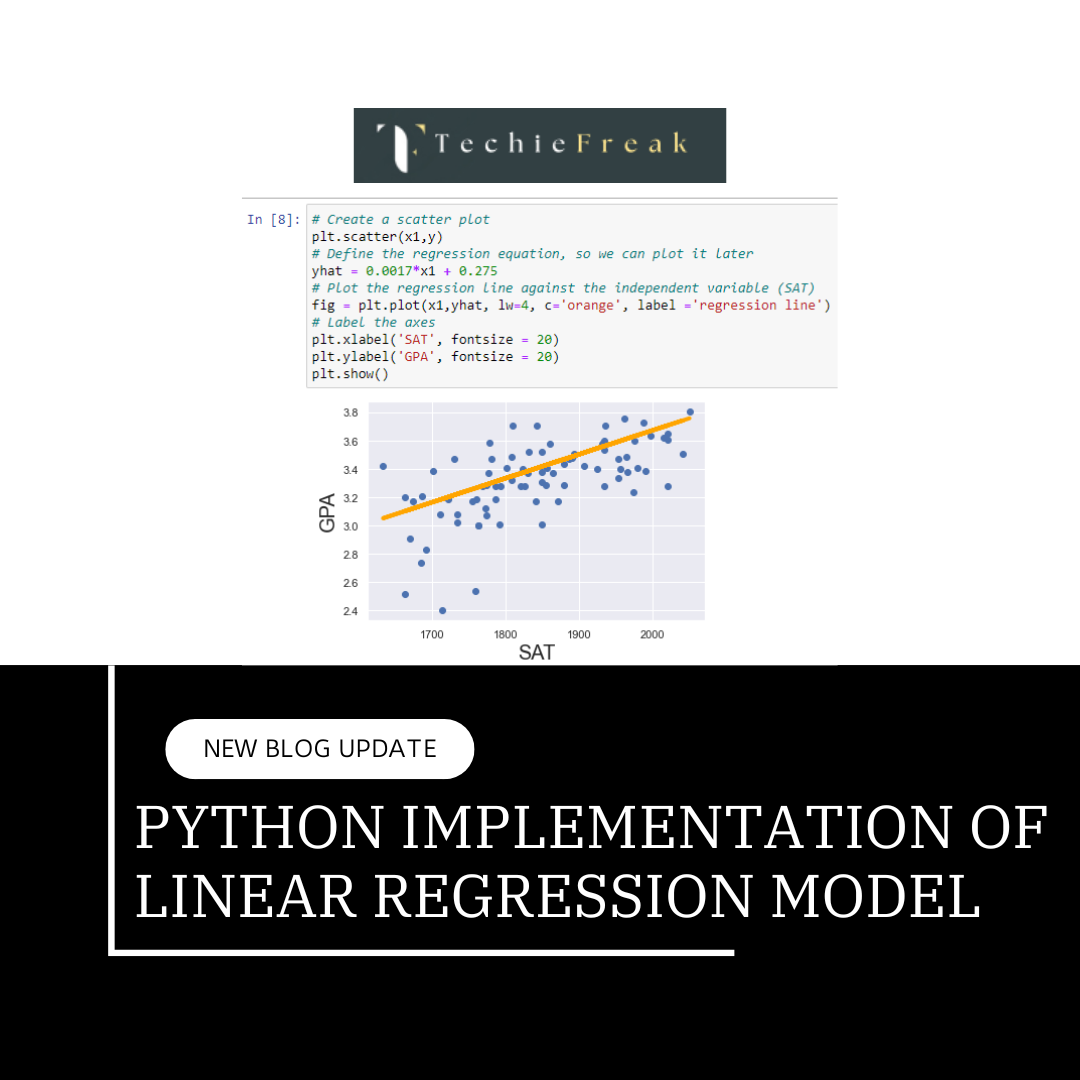
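As a quick sanity check on the synthetic data, an ordinary least-squares fit should recover a slope near 2 and an intercept near 3. The sketch below regenerates the data with the same seed and fits a straight line with `np.polyfit`. The names `X_check`, `y_check`, `slope`, and `intercept` are illustrative and not part of the walkthrough.

```python
import numpy as np

# Regenerate the synthetic data with the same seed as in Step 2
np.random.seed(0)
X_check = np.random.rand(300, 1) * 10
y_check = 2 * X_check + 3 + np.random.randn(300, 1) * 2

# A degree-1 polynomial fit is ordinary least squares on a single feature
slope, intercept = np.polyfit(X_check.ravel(), y_check.ravel(), deg=1)

print(f"Estimated slope: {slope:.3f}")
print(f"Estimated intercept: {intercept:.3f}")
```

The estimates will not equal 2 and 3 exactly because of the added noise, but they should land close to those values.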

Step 3: Visualize the Data

We visualize the relationship between X and y to understand the pattern.

plt.scatter(data['X'], data['y'], color='blue', label='Data')

plt.title("Scatter Plot of X vs y")

plt.xlabel("X")

plt.ylabel("y")

plt.legend()

plt.show()

Step 4: Train-Test Split

Now, we split the data into training and testing sets.

X_train, X_test, y_train, y_test = train_test_split(data[['X']], data['y'], test_size=0.2, random_state=42)

print("Training set size:", X_train.shape[0])

print("Testing set size:", X_test.shape[0])

Output:

Training set size: 240

Testing set size: 60

Step 5: Feature Scaling

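Ridge Regression penalizes the size of the coefficients, so its result depends on the scale of the features. We therefore standardize the features with StandardScaler, fitting the scaler on the training data only and reusing the same mean and standard deviation for the test data.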

# Initialize the scaler

scaler = StandardScaler()

# Scale the training and test data

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test)

# Display the first 5 rows of scaled training data

print("Scaled Training Data:\n", X_train_scaled[:5])

Output:

Scaled Training Data:

[[ 0.02403683]

[-0.91675579]

[-0.252981 ]

[ 0.35312163]

[-1.69304482]]
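To make the scaling step concrete, the following sketch reproduces what `StandardScaler` does by hand: subtract the training mean and divide by the training standard deviation. The arrays `X_train_demo` and `X_test_demo` are made-up stand-ins, not the tutorial's data.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Illustrative stand-ins for the training and test features
rng = np.random.default_rng(0)
X_train_demo = rng.random((50, 1)) * 10
X_test_demo = rng.random((10, 1)) * 10

scaler_demo = StandardScaler()
train_sklearn = scaler_demo.fit_transform(X_train_demo)
test_sklearn = scaler_demo.transform(X_test_demo)

# StandardScaler uses the population standard deviation (ddof=0),
# which is also NumPy's default for .std()
train_mean = X_train_demo.mean(axis=0)
train_std = X_train_demo.std(axis=0)

train_manual = (X_train_demo - train_mean) / train_std
# The test set is scaled with the *training* statistics, never its own
test_manual = (X_test_demo - train_mean) / train_std

print("Train matches:", np.allclose(train_sklearn, train_manual))
print("Test matches:", np.allclose(test_sklearn, test_manual))
```

Reusing the training statistics on the test set keeps information about the test data from leaking into preprocessing.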

Step 6: Fit the Ridge Regression Model

We train the Ridge Regression model on the scaled data.

# Initialize the Ridge model with alpha (regularization strength)

ridge_model = Ridge(alpha=1.0)

# Fit the model

ridge_model.fit(X_train_scaled, y_train)

# Print the coefficients and intercept

print("Model Coefficients:", ridge_model.coef_)

print("Intercept:", ridge_model.intercept_)

Output:

Model Coefficients: [5.66775475]

Intercept: 12.933196681277819
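For intuition about what `alpha` does, Ridge Regression with an unpenalized intercept has a closed-form solution on centered data: w = (XᵀX + αI)⁻¹ Xᵀy. The sketch below implements that formula and compares it with scikit-learn's `Ridge` for several values of `alpha`. The helper `ridge_closed_form` and the demo data are assumptions made for illustration; scikit-learn may use a different numerical solver internally.

```python
import numpy as np
from sklearn.linear_model import Ridge

def ridge_closed_form(X, y, alpha):
    """Minimize ||y - Xw - b||^2 + alpha * ||w||^2 with an unpenalized intercept b."""
    X_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - X_mean, y - y_mean            # centering removes the intercept
    n_features = X.shape[1]
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(n_features), Xc.T @ yc)
    b = y_mean - X_mean @ w                    # recover the intercept afterwards
    return w, b

# Illustrative standardized feature with a noisy linear target
rng = np.random.default_rng(0)
X_demo = rng.standard_normal((200, 1))
y_demo = 5.0 * X_demo[:, 0] + 13.0 + rng.standard_normal(200) * 2

coefs = []
for alpha in [0.1, 1.0, 10.0, 100.0]:
    w, b = ridge_closed_form(X_demo, y_demo, alpha)
    model = Ridge(alpha=alpha).fit(X_demo, y_demo)
    assert np.allclose(w, model.coef_) and np.isclose(b, model.intercept_)
    coefs.append(w[0])
    print(f"alpha={alpha:>6}: coefficient={w[0]:.4f}, intercept={b:.4f}")
```

The coefficient shrinks toward zero as `alpha` grows, which is the regularization effect; with a small `alpha` relative to the number of samples, the fit stays close to ordinary least squares.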

Step 7: Evaluate the Model

We now make predictions on the test set and evaluate the model’s performance using R², Mean Absolute Error (MAE), and Mean Squared Error (MSE).

# Make predictions

y_pred = ridge_model.predict(X_test_scaled)

# Evaluate the model

r2 = r2_score(y_test, y_pred)

mae = mean_absolute_error(y_test, y_pred)

mse = mean_squared_error(y_test, y_pred)

print("R² Score:", r2)

print("Mean Absolute Error:", mae)

print("Mean Squared Error:", mse)

Output:

R² Score: 0.8655909673477045

Mean Absolute Error: 1.649257093583459

Mean Squared Error: 4.483610836129543
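The three metrics above follow directly from the residuals. The sketch below computes them by hand on a small made-up example and checks the results against scikit-learn; the arrays `y_true_demo` and `y_pred_demo` are illustrative values, not the tutorial's predictions.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Small made-up example
y_true_demo = np.array([3.0, 5.0, 7.5, 10.0, 12.0])
y_pred_demo = np.array([2.5, 5.5, 7.0, 11.0, 11.5])

residuals = y_true_demo - y_pred_demo

# MAE: average absolute residual
mae_manual = np.mean(np.abs(residuals))
# MSE: average squared residual
mse_manual = np.mean(residuals ** 2)
# R^2: 1 - (residual sum of squares / total sum of squares)
ss_res = np.sum(residuals ** 2)
ss_tot = np.sum((y_true_demo - y_true_demo.mean()) ** 2)
r2_manual = 1 - ss_res / ss_tot

print("MAE:", mae_manual, "| sklearn:", mean_absolute_error(y_true_demo, y_pred_demo))
print("MSE:", mse_manual, "| sklearn:", mean_squared_error(y_true_demo, y_pred_demo))
print("R²:", r2_manual, "| sklearn:", r2_score(y_true_demo, y_pred_demo))
```

MAE is in the same units as y, while MSE is in squared units; taking the square root of MSE (RMSE) brings it back to the units of y.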

Step 8: Visualize the Predictions

We compare the true values and predicted values using a scatter plot.

# Visualizing the true vs predicted values

plt.scatter(X_test, y_test, color='blue', label='True Values')

plt.scatter(X_test, y_pred, color='red', label='Predicted Values')

plt.title("True vs Predicted Values")

plt.xlabel("X")

plt.ylabel("y")

plt.legend()

plt.show()

Step 9: Visualize the Ridge Regression Line

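Finally, we plot the Ridge Regression line over the entire dataset to see how well the model, which was trained on the training split, fits the data.

# Plot the Ridge Regression line over the full dataset

# Sort X so the line is drawn from left to right, and scale it like the training data

X_line = data[['X']].sort_values('X')

y_line = ridge_model.predict(scaler.transform(X_line))

plt.scatter(data['X'], data['y'], color='blue', label='Data')

plt.plot(X_line['X'], y_line, color='red', label='Ridge Regression Line')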

plt.title("Ridge Regression Line")

plt.xlabel("X")

plt.ylabel("y")

plt.legend()

plt.show()
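Because the model is trained on standardized features, its coefficient is expressed per standard deviation of X rather than per unit of X. The sketch below shows one way to convert the fitted line back to the original units using the scaler's `mean_` and `scale_` attributes. For brevity it fits on the full synthetic dataset rather than the training split, so the numbers will differ slightly from the outputs above; all names ending in `_demo` are illustrative.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.preprocessing import StandardScaler

# Same synthetic data as in Step 2 (fitted on all rows here for brevity)
np.random.seed(0)
X_demo = np.random.rand(300, 1) * 10
y_demo = (2 * X_demo + 3 + np.random.randn(300, 1) * 2).ravel()

scaler_demo = StandardScaler()
ridge_demo = Ridge(alpha=1.0).fit(scaler_demo.fit_transform(X_demo), y_demo)

# y = coef * (x - mean) / scale + intercept
#   = (coef / scale) * x + (intercept - coef * mean / scale)
coef = ridge_demo.coef_[0]
mean, scale = scaler_demo.mean_[0], scaler_demo.scale_[0]
slope_original = coef / scale
intercept_original = ridge_demo.intercept_ - coef * mean / scale

# Both routes should give the same predictions
x_grid = np.linspace(0, 10, 5).reshape(-1, 1)
via_pipeline = ridge_demo.predict(scaler_demo.transform(x_grid))
via_formula = slope_original * x_grid.ravel() + intercept_original

print(f"Slope in original units: {slope_original:.3f}")
print(f"Intercept in original units: {intercept_original:.3f}")
print("Predictions match:", np.allclose(via_pipeline, via_formula))
```

In original units the slope and intercept should sit close to the true values of 2 and 3 used to generate the data.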

Summary of Outputs:

- Scatter Plot of X vs y: Visualizes the linear data distribution.

- Training and Testing Split Sizes: Confirms the split ratio between training and testing datasets.

- Scaled Features: Shows the scaled features ready for model fitting.

- Model Coefficients and Intercept: Highlights the learned parameters of the Ridge regression model.

- R², MAE, and MSE Scores: Evaluates the model's predictive accuracy.

- True vs Predicted Values: Plots the comparison between actual and predicted values.

- Ridge Regression Line: Illustrates the fitted regression line.

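This completes the Python implementation of Ridge Regression. On the test set the model reaches an R² of about 0.87 with an MSE of about 4.48, which is close to the noise variance of 4 built into the synthetic data, so most of the remaining error is noise the model cannot explain.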
